- Vulkan® Guide

- 1. Logistics Overview

- 2. What is Vulkan?

- 3. What Vulkan Can Do

- 4. Vulkan Specification

- 5. Platforms

- 6. Checking For Vulkan Support

- 7. Versions

- 8. Vulkan Release Summary

- 9. What is SPIR-V

- 10. Portability Initiative

- 11. Vulkan CTS

- 12. Development Tools

- 13. Vulkan Validation Overview

- 14. Vulkan Decoder Ring

- 15. Using Vulkan

- 16. Loader

- 17. Layers

- 18. Querying Properties, Extensions, Features, Limits, and Formats

- 19. Enabling Extensions

- 20. Enabling Features

- 21. Using SPIR-V Extensions

- 22. Formats

- 23. Queues

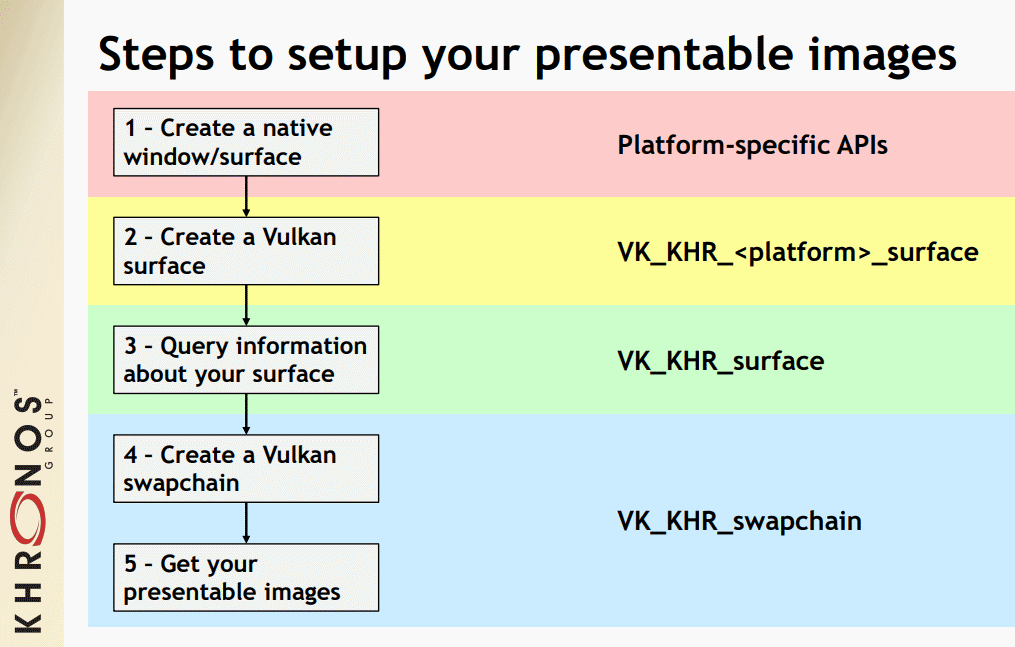

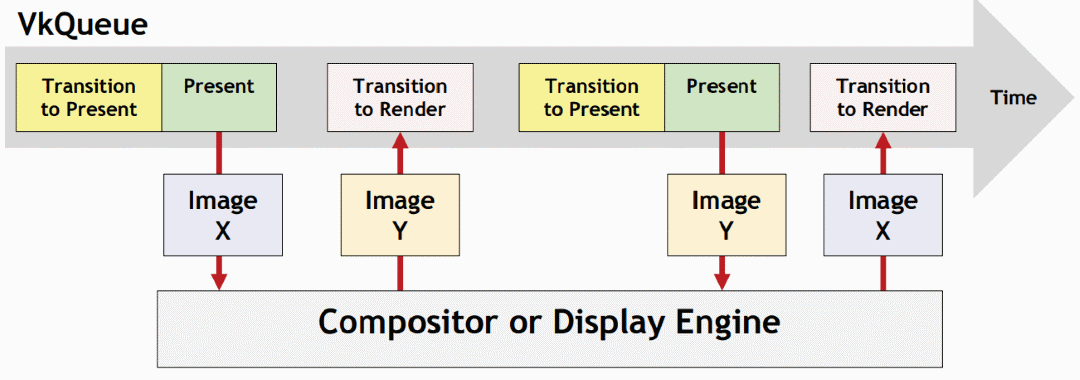

- 24. Window System Integration (WSI)

- 25. pNext and sType

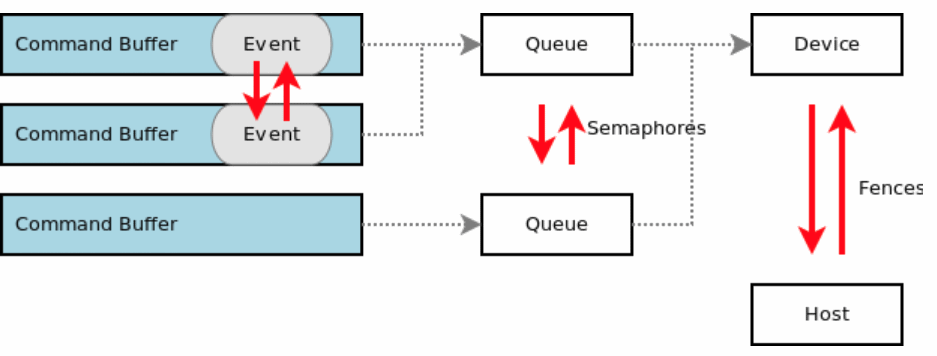

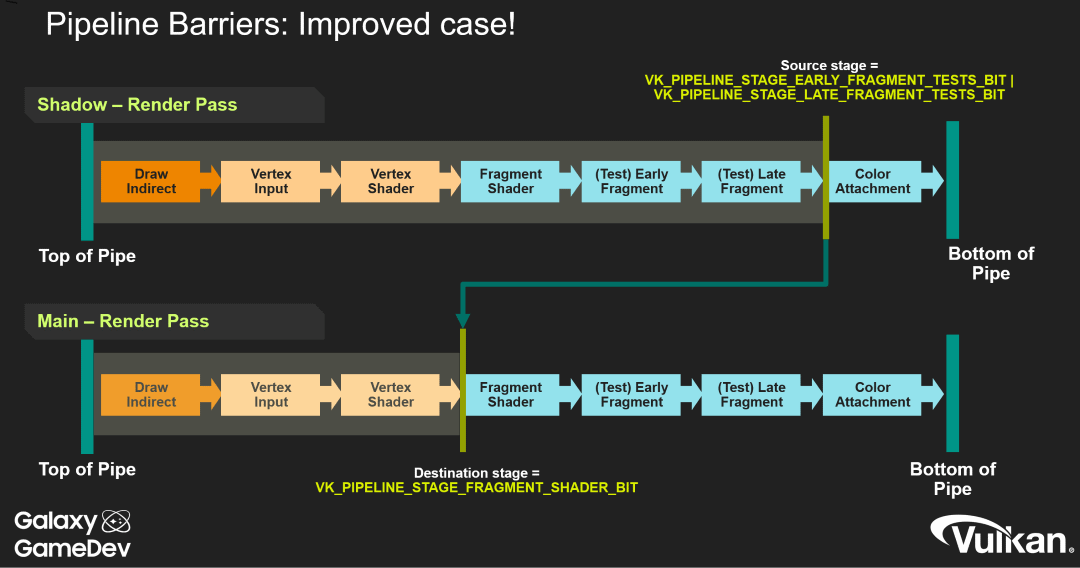

- 26. Synchronization

- 27. VK_KHR_synchronization2

- 27.1. Rethinking Pipeline Stages and Access Flags

- 27.2. Reusing the same pipeline stage and access flag names

- 27.3. VkSubpassDependency

- 27.4. Splitting up pipeline stages and access masks

- 27.5. VK_ACCESS_SHADER_WRITE_BIT alias

- 27.6. TOP_OF_PIPE and BOTTOM_OF_PIPE deprecation

- 27.7. Making use of new image layouts

- 27.8. New submission flow

- 27.9. Emulation Layer

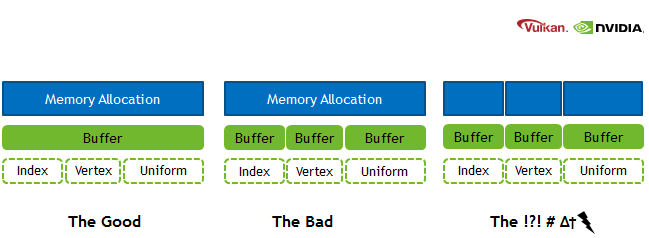

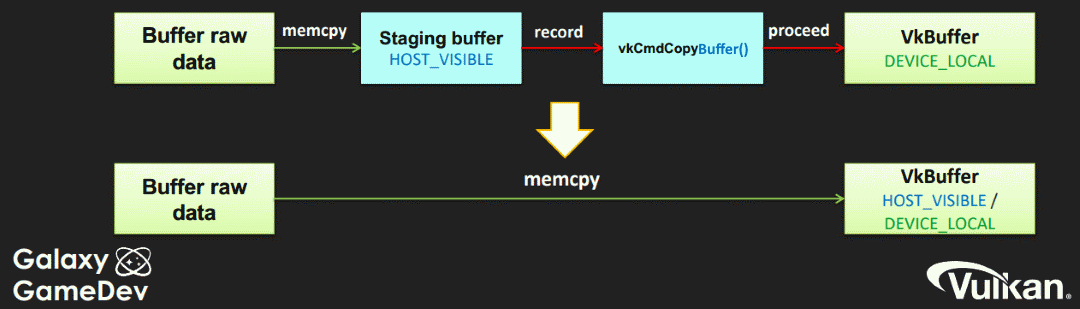

- 28. Memory Allocation

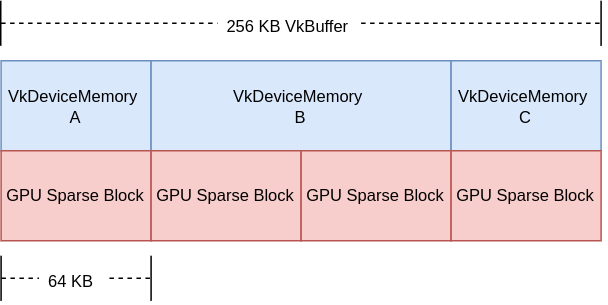

- 29. Sparse Resources

- 30. Protected Memory

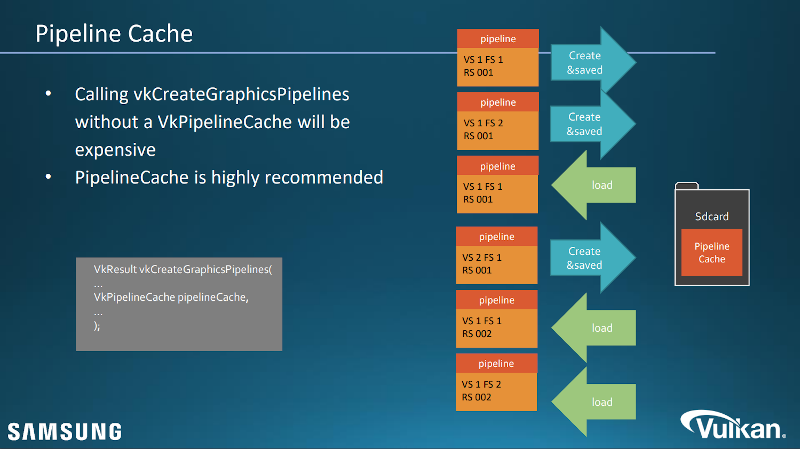

- 31. Pipeline Cache

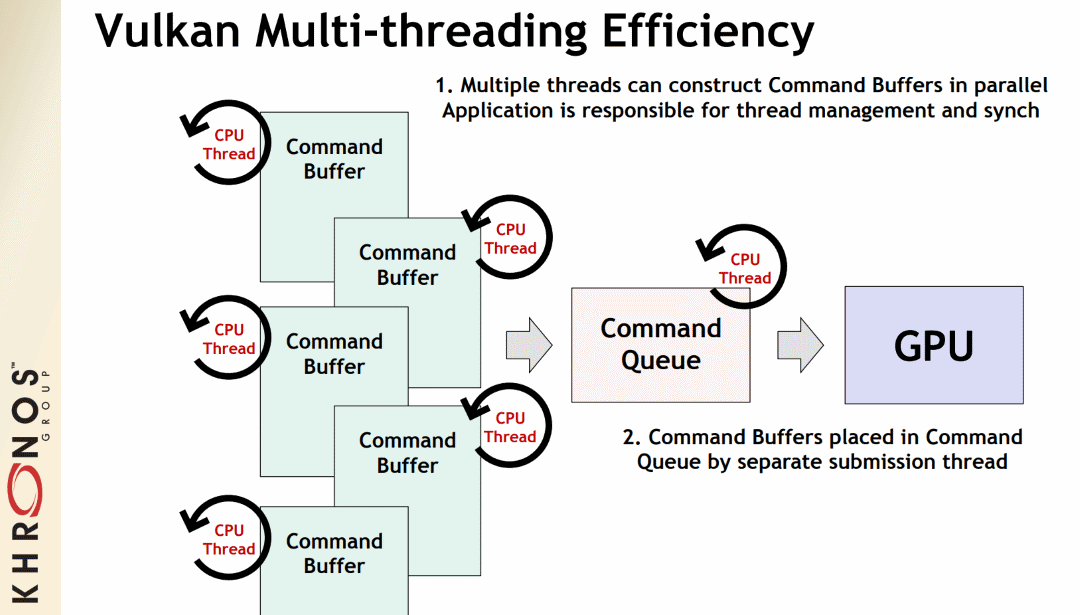

- 32. Threading

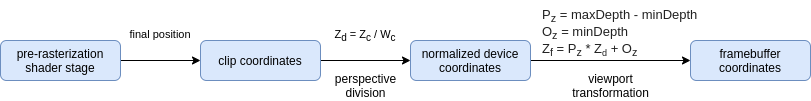

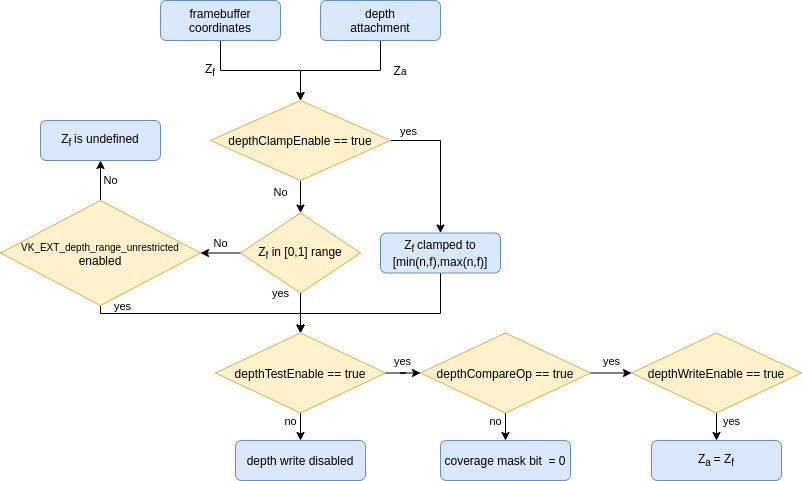

- 33. Depth

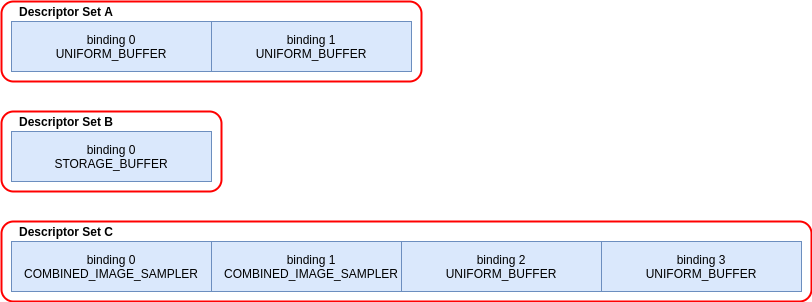

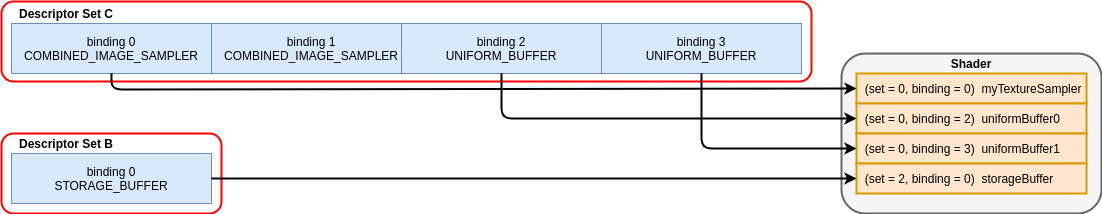

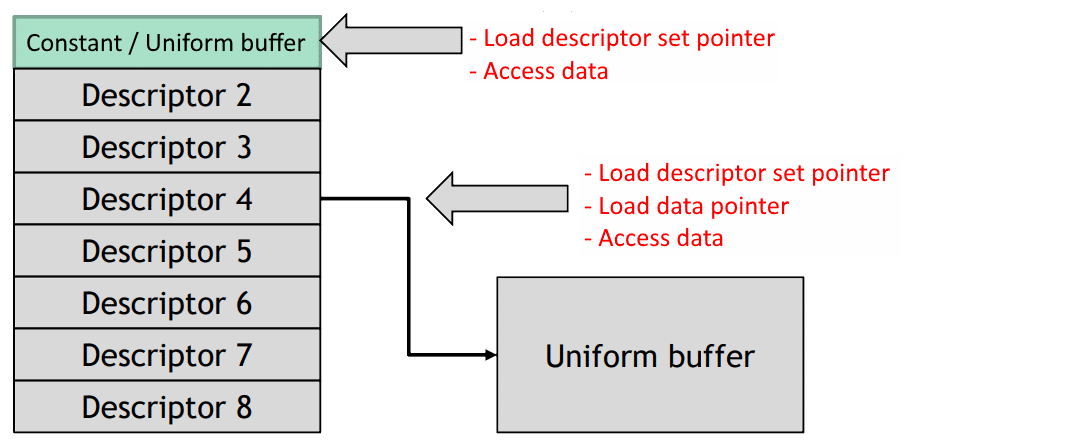

- 34. Mapping Data to Shaders

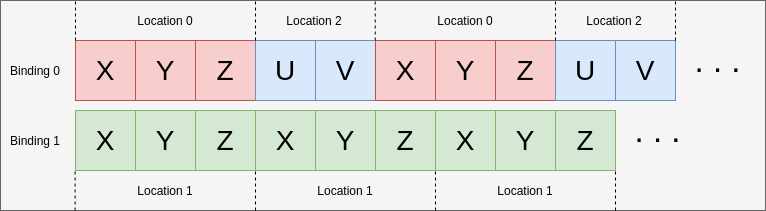

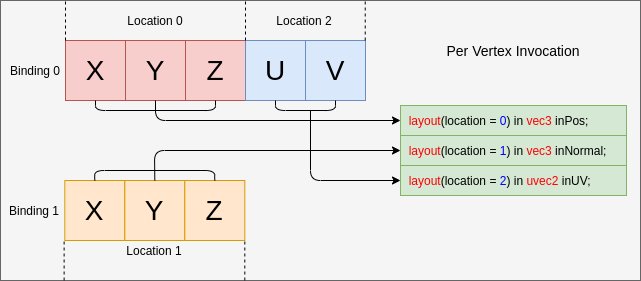

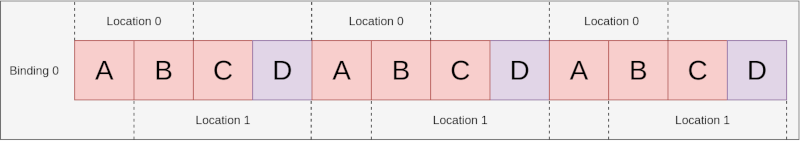

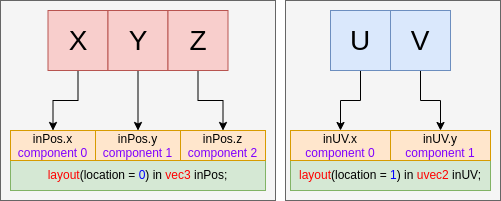

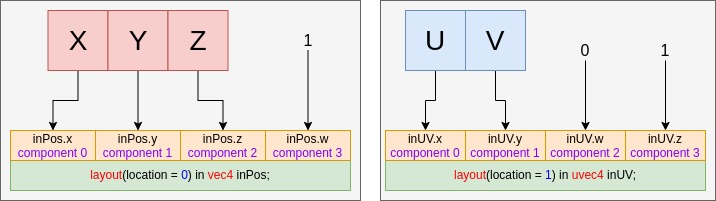

- 35. Vertex Input Data Processing

- 36. Descriptor Dynamic Offset

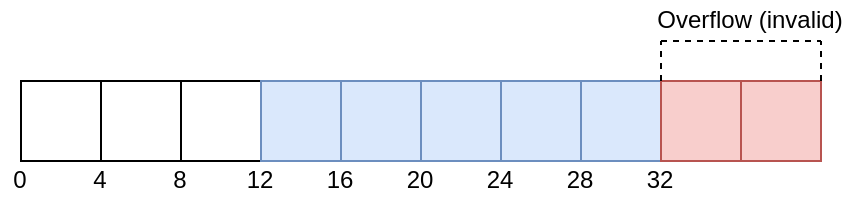

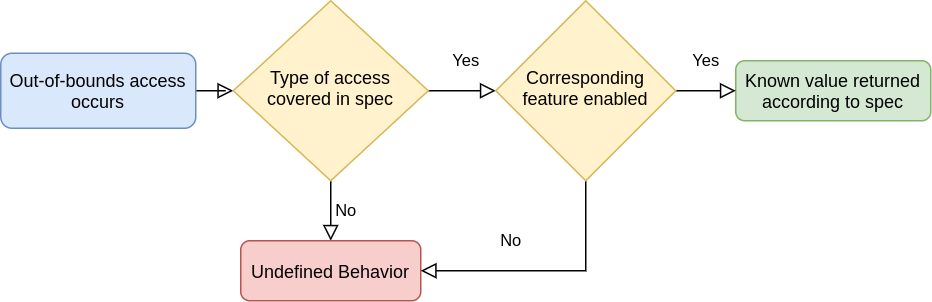

- 37. Robustness

- 38. Pipeline Dynamic State

- 39. Subgroups

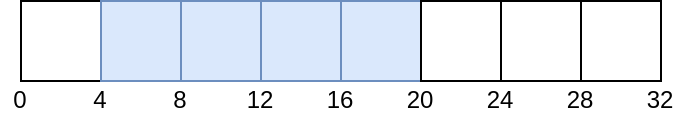

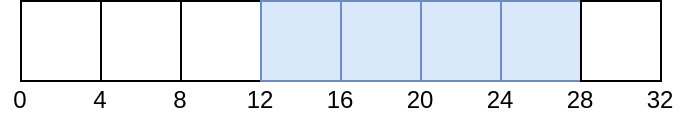

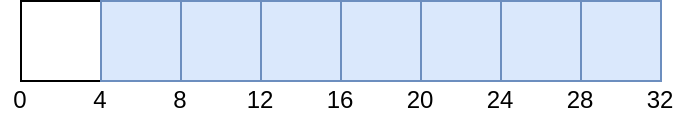

- 40. Shader Memory Layout

- 41. Atomics

- 42. Common Pitfalls for New Vulkan Developers

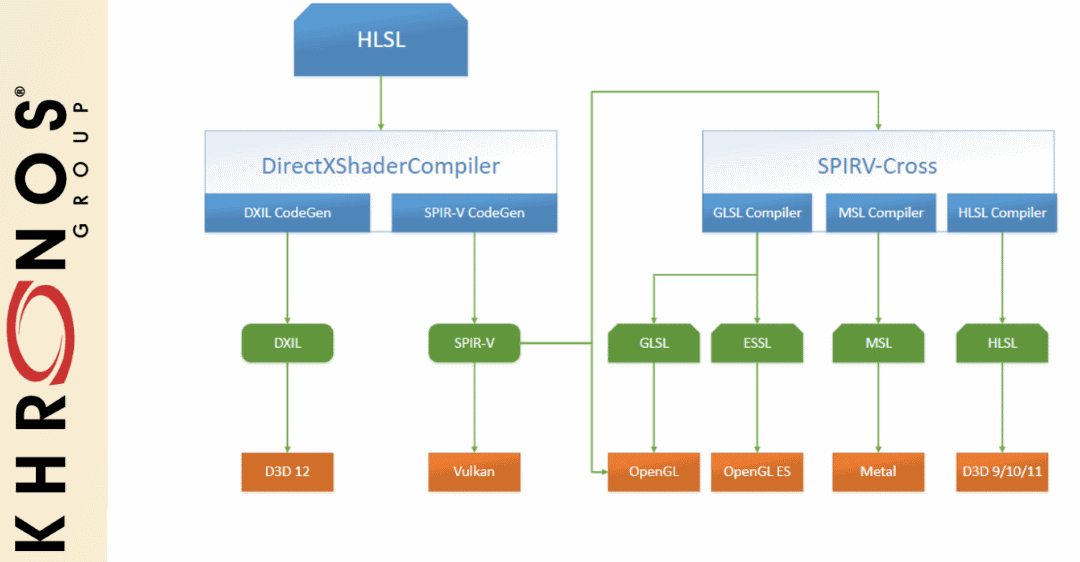

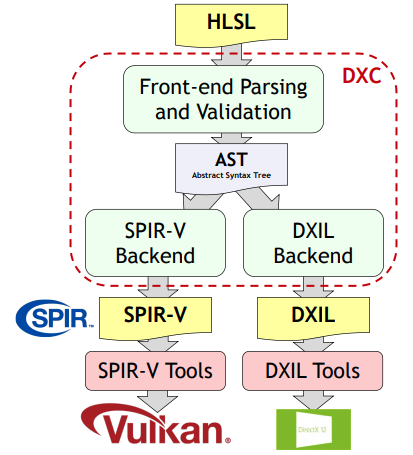

- 43. HLSL in Vulkan

- 44. When and Why to use Extensions

- 45. Cleanup Extensions

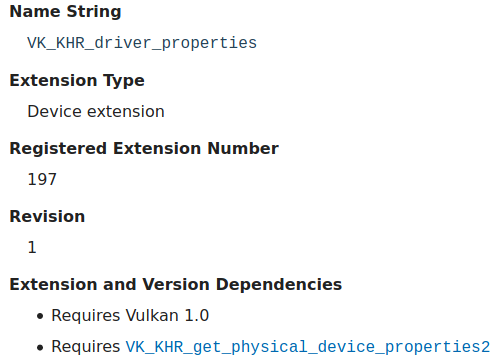

- 45.1. VK_KHR_driver_properties

- 45.2. VK_EXT_host_query_reset

- 45.3. VK_KHR_separate_depth_stencil_layouts

- 45.4. VK_KHR_depth_stencil_resolve

- 45.5. VK_EXT_separate_stencil_usage

- 45.6. VK_KHR_dedicated_allocation

- 45.7. VK_EXT_sampler_filter_minmax

- 45.8. VK_KHR_sampler_mirror_clamp_to_edge

- 45.9. VK_EXT_4444_formats and VK_EXT_ycbcr_2plane_444_formats

- 45.10. VK_KHR_format_feature_flags2

- 45.11. VK_EXT_rgba10x6_formats

- 45.12. Maintenance Extensions

- 45.13. pNext Expansions

- 46. Device Groups

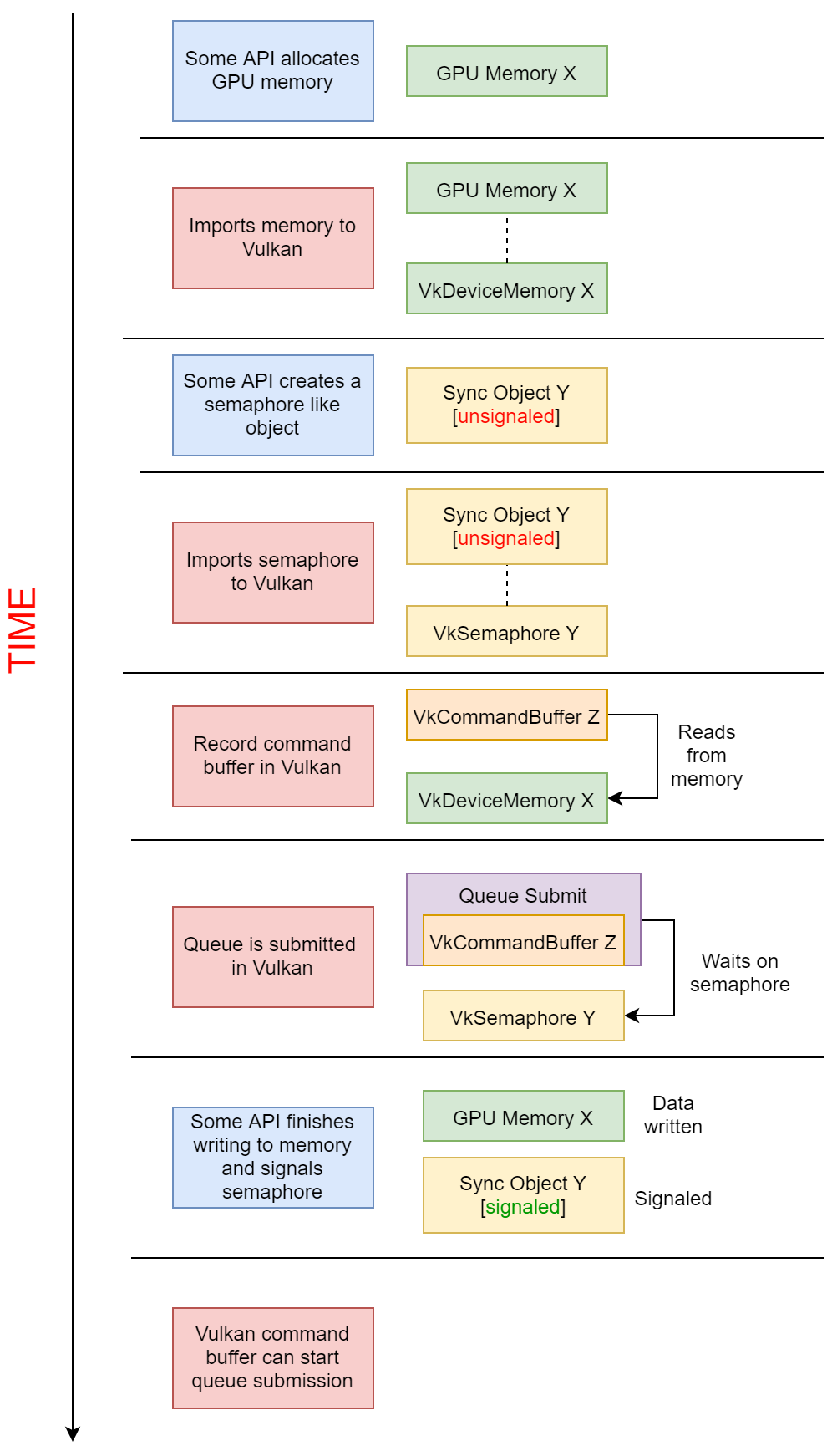

- 47. External Memory and Synchronization

- 48. Ray Tracing

- 49. Shader Features

- 49.1. VK_KHR_spirv_1_4

- 49.2. VK_KHR_8bit_storage and VK_KHR_16bit_storage

- 49.3. VK_KHR_shader_float16_int8

- 49.4. VK_KHR_shader_float_controls

- 49.5. VK_KHR_storage_buffer_storage_class

- 49.6. VK_KHR_variable_pointers

- 49.7. VK_KHR_vulkan_memory_model

- 49.8. VK_EXT_shader_viewport_index_layer

- 49.9. VK_KHR_shader_draw_parameters

- 49.10. VK_EXT_shader_stencil_export

- 49.11. VK_EXT_shader_demote_to_helper_invocation

- 49.12. VK_KHR_shader_clock

- 49.13. VK_KHR_shader_non_semantic_info

- 49.14. VK_KHR_shader_terminate_invocation

- 49.15. VK_KHR_workgroup_memory_explicit_layout

- 49.16. VK_KHR_zero_initialize_workgroup_memory

- 50. Translation Layer Extensions

- 51. VK_EXT_descriptor_indexing

- 52. VK_EXT_inline_uniform_block

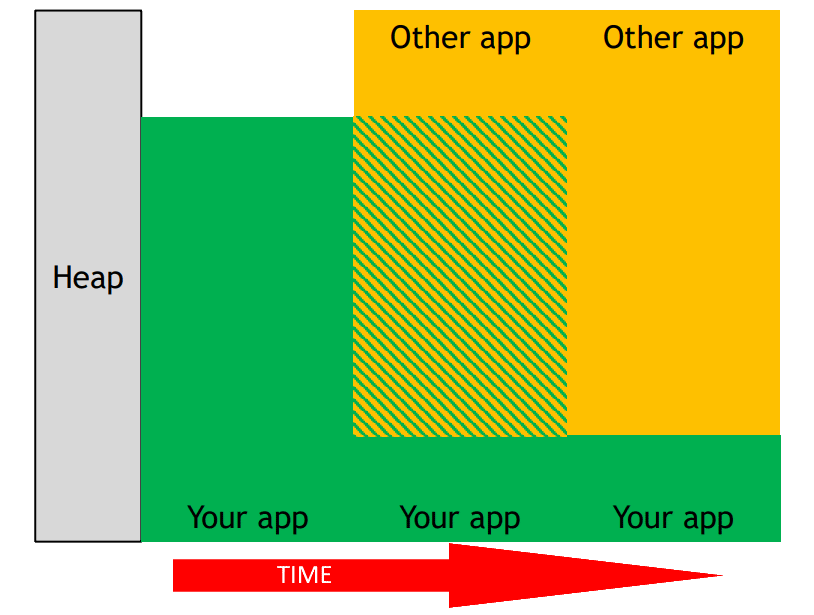

- 53. VK_EXT_memory_priority

- 54. VK_KHR_descriptor_update_template

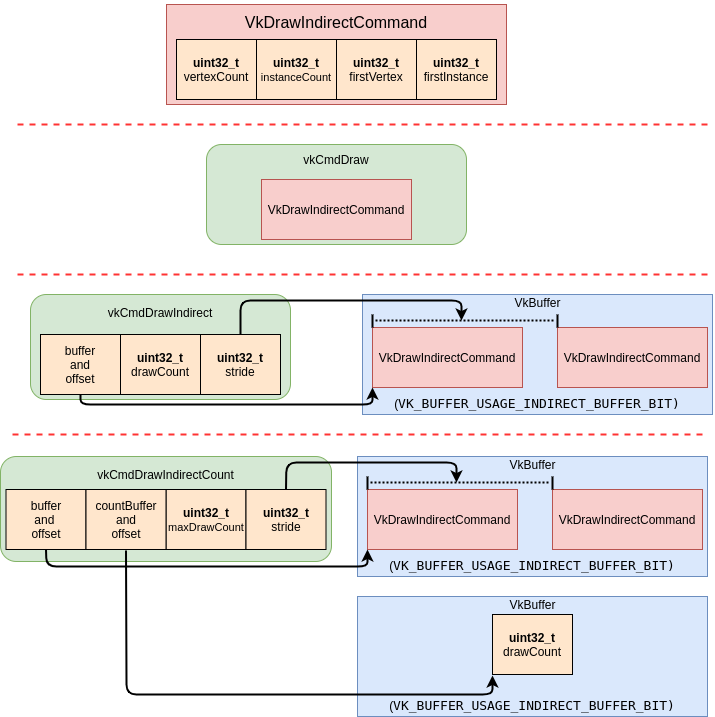

- 55. VK_KHR_draw_indirect_count

- 56. VK_KHR_image_format_list

- 57. VK_KHR_imageless_framebuffer

- 58. VK_KHR_sampler_ycbcr_conversion

- 59. VK_KHR_shader_subgroup_uniform_control_flow

- 60. Contributing

- 61. License

- 62. Code of conduct

permalink: /Notes/004-3d-rendering/vulkan/guide.html layout: post

Vulkan® Guide

The Khronos® Vulkan Working Group :data-uri: :icons: font :toc2: :toclevels: 2 :max-width: 100% :numbered: :source-highlighter: rouge :rouge-style: github

The Vulkan Guide is designed to help developers get up and going with the world of Vulkan. It is aimed to be a light read that leads to many other useful links depending on what a developer is looking for. All information is intended to help better fill the gaps about the many nuances of Vulkan.

1. Logistics Overview

permalink: /Notes/004-3d-rendering/vulkan/chapters/what_is_vulkan.html layout: default ---

2. What is Vulkan?

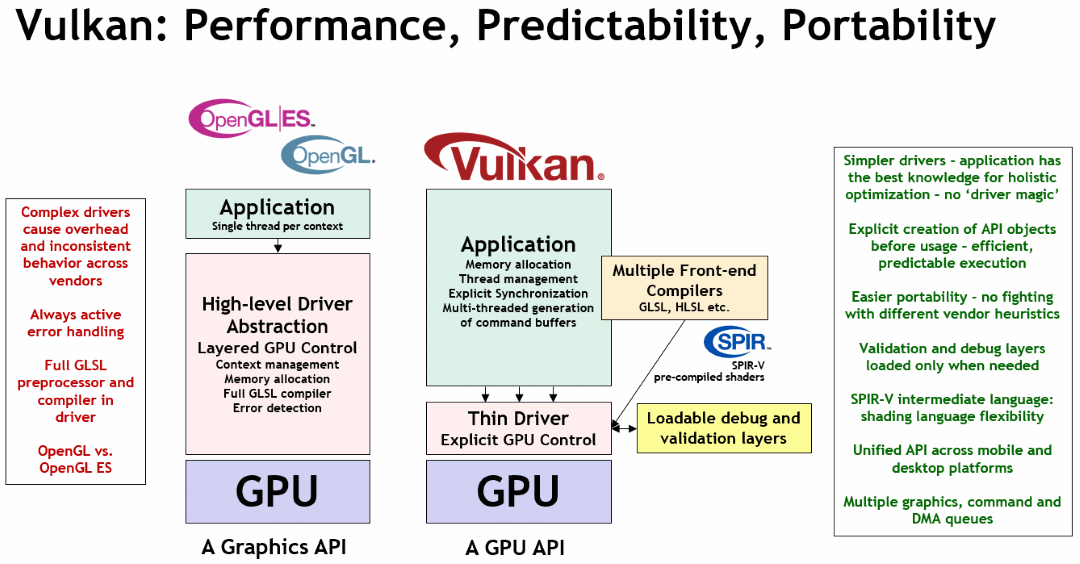

| Note | Vulkan is a new generation graphics and compute API that provides high-efficiency, cross-platform access to modern GPUs used in a wide variety of devices from PCs and consoles to mobile phones and embedded platforms. |

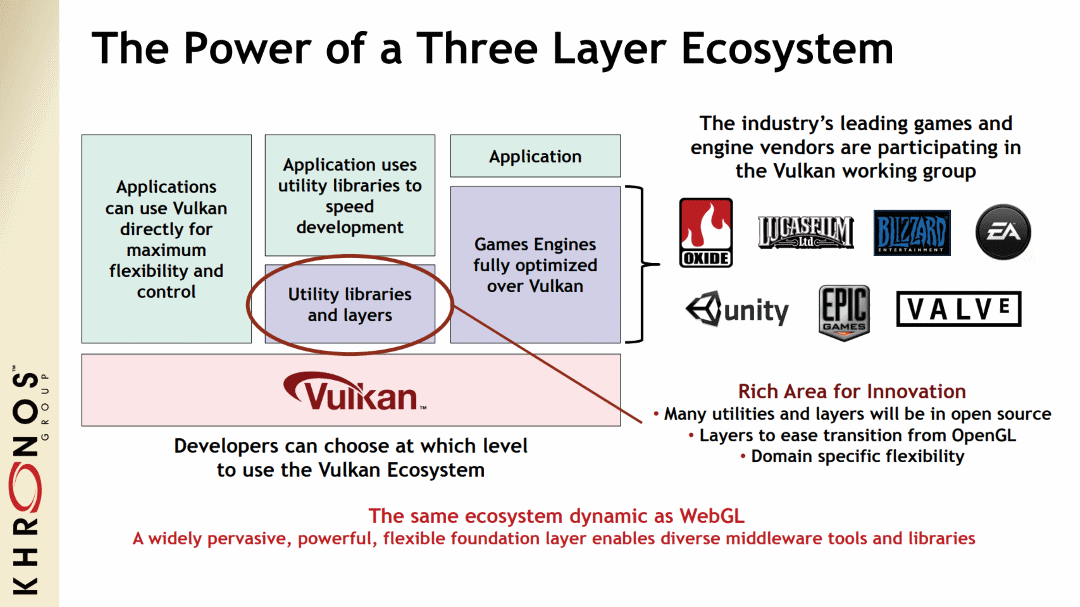

Vulkan is not a company, nor language, but rather a way for developers to program their modern GPU hardware in a cross-platform and cross-vendor fashion. The Khronos Group is a member-driven consortium that created and maintains Vulkan.

2.1. Vulkan at its core

At the core, Vulkan is an API Specification that conformant hardware implementations follow. The public specification is generated from the ./xml/vk.xml Vulkan Registry file in the official public copy of the Vulkan Specification repo found at Vulkan-Doc. Documentation of the XML schema is also available.

The Khronos Group, along with the Vulkan Specification, releases C99 header files generated from the API Registry that developers can use to interface with the Vulkan API.

2.2. Vulkan and OpenGL

Some developers might be aware of the other Khronos Group standard OpenGL which is also a 3D Graphics API. Vulkan is not a direct replacement for OpenGL, but rather an explicit API that allows for more explicit control of the GPU.

Khronos' Vulkan Samples article on "How does Vulkan compare to OpenGL ES? What should you expect when targeting Vulkan? offers a more detailed comparison between the two APIs.

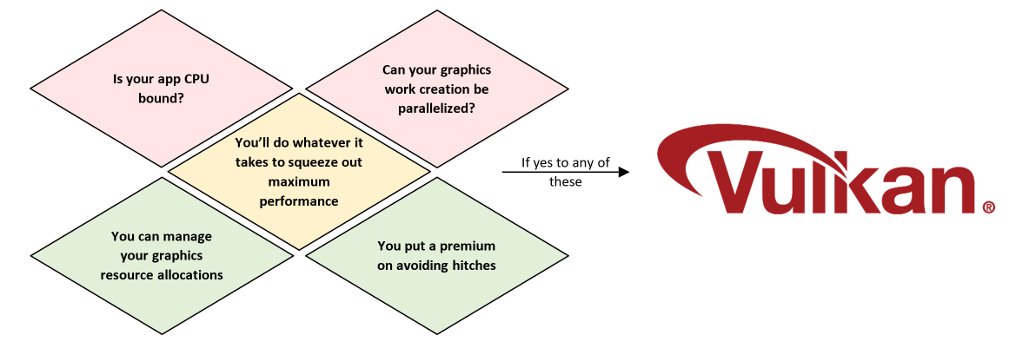

Vulkan puts more work and responsibility into the application. Not every developer will want to make that extra investment, but those that do so correctly can find power and performance improvements.

2.3. Using helping libraries

While some developers may want to try using Vulkan with no help, it is common to use some lighter libraries in your development flow to help abstract some of the more tedious aspect of Vulkan. Here are some libraries to help with development

2.4. Learning to use Vulkan

Vulkan is a tool for developers to create hardware accelerated applications. The Vulkan Guide tries to cover the more logistical material such as extensions, versions, spec, etc. For more information how to “use” Vulkan to create something such as the Hello World Triangle, please take a look at resources such as those found in Khronos' Vulkan “learn” page. If you want to get more hands-on help and knowledge, feel free to join the Khronos Developer Slack or the Khronos Community Forums as well!

permalink: /Notes/004-3d-rendering/vulkan/chapters/what_vulkan_can_do.html ---

3. What Vulkan Can Do

Vulkan can be used to develop applications for many use cases. While Vulkan applications can choose to use a subset of the functionality described below, it was designed so a developer could use all of them in a single API.

| Note | It is important to understand Vulkan is a box of tools and there are multiple ways of doing a task. |

3.1. Graphics

2D and 3D graphics are primarily what the Vulkan API is designed for. Vulkan is designed to allow developers to create hardware accelerated graphical applications.

| Note | All Vulkan implementations are required to support Graphics, but the WSI system is not required. |

3.2. Compute

Due to the parallel nature of GPUs, a new style of programming referred to as GPGPU can be used to exploit a GPU for computational tasks. Vulkan supports compute variations of VkQueues, VkPipelines, and more which allow Vulkan to be used for general computation.

| Note | All Vulkan implementations are required to support Compute. |

3.3. Ray Tracing

Ray tracing is an alternative rendering technique, based around the concept of simulating the physical behavior of light.

Cross-vendor API support for ray tracing was added to Vulkan as a set of extensions in the 1.2.162 specification. These are primarily VK_KHR_ray_tracing_pipeline, VK_KHR_ray_query, and VK_KHR_acceleration_structure.

| Note | There is also an older NVIDIA vendor extension exposing an implementation of ray tracing on Vulkan. This extension preceded the cross-vendor extensions. For new development, applications are recommended to prefer the more recent KHR extensions. |

3.4. Video

Vulkan Video has release a provisional specification as of the 1.2.175 spec release.

Vulkan Video adheres to the Vulkan philosophy of providing flexible, fine-grained control over video processing scheduling, synchronization, and memory utilization to the application.

| Note | feedback for the provisional specification is welcomed |

3.5. Machine Learning

Currently, the Vulkan Working Group is looking into how to make Vulkan a first class API for exposing ML compute capabilities of modern GPUs. More information was announced at Siggraph 2019.

| Note | As of now, there exists no public Vulkan API for machine learning. |

3.6. Safety Critical

Vulkan SC ("Safety Critical") aims to bring the graphics and compute capabilities of modern GPUs to safety-critical systems in the automotive, avionics, industrial and medical space. It was publicly launched on March 1st 2022 and the specification is available here.

| Note | Vulkan SC is based on Vulkan 1.2, but removed functionality that is not needed for safety-critical markets, increases the robustness of the specification by eliminating ignored parameters and undefined behaviors, and enables enhanced detection, reporting, and correction of run-time faults. |

permalink:/Notes/004-3d-rendering/vulkan/chapters/vulkan_spec.html layout: default ---

4. Vulkan Specification

The Vulkan Specification (usually referred to as the Vulkan Spec) is the official description of how the Vulkan API works and is ultimately used to decide what is and is not valid Vulkan usage. At first glance, the Vulkan Spec seems like an incredibly huge and dry chunk of text, but it is usually the most useful item to have open when developing.

| Note | Reference the Vulkan Spec early and often. |

4.1. Vulkan Spec Variations

The Vulkan Spec can be built for any version and with any permutation of extensions. The Khronos Group hosts the Vulkan Spec Registry which contains a few publicly available variations that most developers will find sufficient. Anyone can build their own variation of the Vulkan Spec from Vulkan-Docs.

When building the Vulkan Spec, you pass in what version of Vulkan to build for as well as what extensions to include. A Vulkan Spec without any extensions is also referred to as the core version as it is the minimal amount of Vulkan an implementation needs to support in order to be conformant.

4.2. Vulkan Spec Format

The Vulkan Spec can be built into different formats.

4.2.1. HTML Chunked

Due to the size of the Vulkan Spec, a chunked version is the default when you visit the default index.html page.

Prebuilt HTML Chunked Vulkan Spec

-

The Vulkan SDK comes packaged with the chunked version of the spec. Each Vulkan SDK version includes the corresponding spec version. See the Chunked Specification for the latest Vulkan SDK.

-

Vulkan 1.0 Specification

-

Vulkan 1.1 Specification

-

Vulkan 1.2 Specification

-

Vulkan 1.3 Specification

4.2.2. HTML Full

If you want to view the Vulkan Spec in its entirety as HTML, you just need to view the vkspec.html file.

Prebuilt HTML Full Vulkan Spec

-

The Vulkan SDK comes packaged with Vulkan Spec in its entirety as HTML for the version corresponding to the Vulkan SDK version. See the HTML version of the Specification for the latest Vulkan SDK. (Note: Slow to load. The advantage of the full HTML version is its searching capability).

-

Vulkan 1.0 Specification

-

Vulkan 1.1 Specification

-

Vulkan 1.2 Specification

-

Vulkan 1.3 Specification

4.2.3. PDF

To view the PDF format, visit the pdf/vkspec.pdf file.

Prebuilt PDF Vulkan Spec

-

Vulkan 1.0 Specification

-

Vulkan 1.1 Specification

-

Vulkan 1.2 Specification

-

Vulkan 1.3 Specification

4.2.4. Man pages

The Khronos Group currently only host the Vulkan Man Pages for the latest version of the 1.3 spec, with all extensions, on the online registry.

The Vulkan Man Pages can also be found in the VulkanSDK for each SDK version. See the Man Pages for the latest Vulkan SDK.

permalink:/Notes/004-3d-rendering/vulkan/chapters/platforms.html layout: default ---

5. Platforms

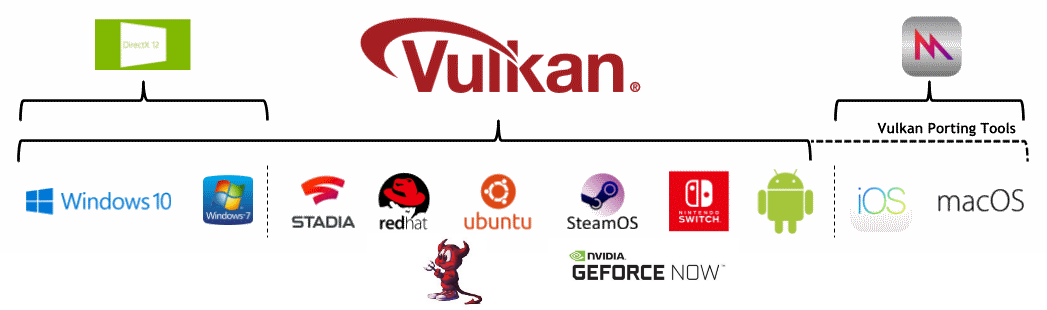

While Vulkan runs on many platforms, each has small variations on how Vulkan is managed.

5.1. Android

The Vulkan API is available on any Android device starting with API level 24 (Android Nougat), however not all devices will have a Vulkan driver.

Android uses its Hardware Abstraction Layer (HAL) to find the Vulkan Driver in a predefined path.

All 64-bit devices that launch with API level 29 (Android Q) or later must include a Vulkan 1.1 driver.

5.2. BSD Unix

Vulkan is supported on many BSD Unix distributions.

5.3. Fuchsia

Vulkan is supported on the Fuchsia operation system.

5.4. iOS

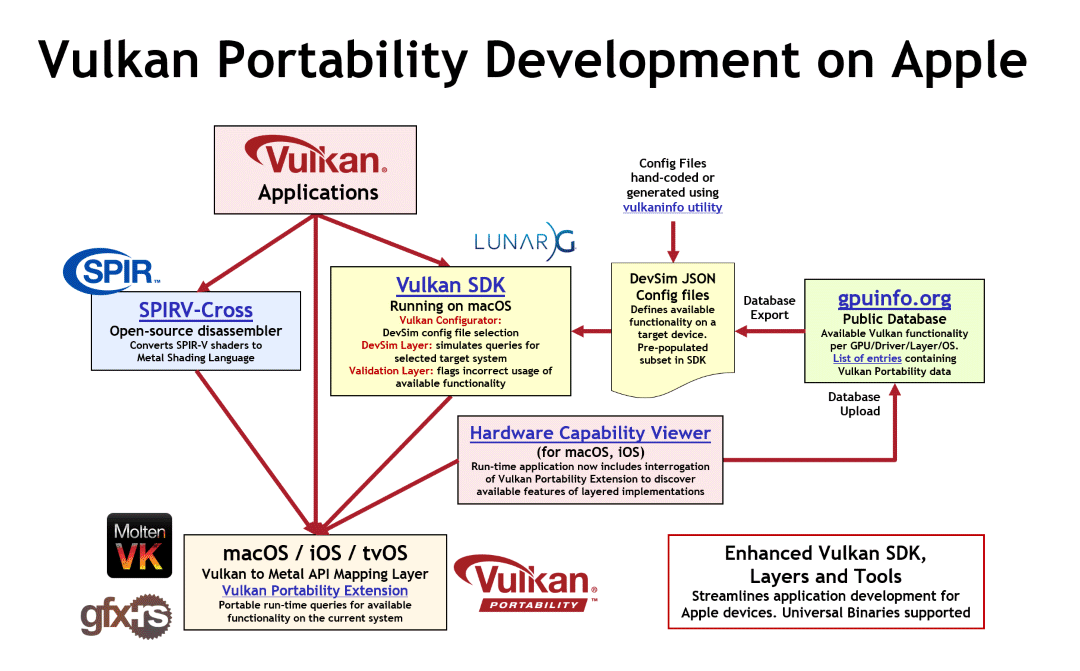

Vulkan is not natively supported on iOS, but can still be targeted with Vulkan Portability Tools.

5.5. Linux

Vulkan is supported on many Linux distributions.

5.6. MacOS

Vulkan is not natively supported on MacOS, but can still be targeted with Vulkan Portability Tools.

5.7. Nintendo Switch

The Nintendo Switch runs an NVIDIA Tegra chipset that supports native Vulkan.

5.8. QNX

Vulkan is supported on QNX operation system.

5.9. Stadia

Google’s Stadia runs on AMD based Linux machines and Vulkan is the required graphics API.

5.10. Windows

Vulkan is supported on Windows 7, Windows 8, and Windows 10.

5.11. Others

Some embedded systems support Vulkan by allowing presentation directly-to-display.

permalink: /Notes/004-3d-rendering/vulkan/chapters/checking_for_support.html ---

6. Checking For Vulkan Support

Vulkan requires both a Vulkan Loader and a Vulkan Driver (also referred to as a Vulkan Implementation). The driver is in charge of translating Vulkan API calls into a valid implementation of Vulkan. The most common case is a GPU hardware vendor releasing a driver that is used to run Vulkan on a physical GPU. It should be noted that it is possible to have an entire implementation of Vulkan software based, though the performance impact would be very noticeable.

When checking for Vulkan Support it is important to distinguish the difference between platform support and device support.

6.1. Platform Support

The first thing to check is if your platform even supports Vulkan. Each platform uses a different mechanism to manage how the Vulkan Loader is implemented. The loader is then in charge of determining if a Vulkan Driver is exposed correctly.

6.1.1. Android

A simple way of grabbing info on Vulkan is to run the Vulkan Hardware Capability Viewer app developed by Sascha Willems. This app will not only show if Vulkan is supported, but also all the capabilities the device offers.

6.1.2. BSD Unix

Grab the Vulkan SDK. Build Vulkan SDK using the command ./vulkansdk.sh and then run the vulkaninfo executable to easily check for Vulkan support as well as all the capabilities the device offers.

6.1.3. iOS

A simple way of grabbing info on Vulkan is to run the iOS port of the Vulkan Hardware Capability Viewer provided by LunarG. This app will not only show if Vulkan is supported, but also all the capabilities the device offers.

6.1.4. Linux

Grab the Vulkan SDK and run the vulkaninfo executable to easily check for Vulkan support as well as all the capabilities the device offers.

6.1.5. MacOS

Grab the Vulkan SDK and run the vulkaninfo executable to easily check for Vulkan support as well as all the capabilities the device offers.

6.1.6. Windows

Grab the Vulkan SDK and run the vulkaninfo.exe executable to easily check for Vulkan support as well as all the capabilities the device offers.

6.2. Device Support

Just because the platform supports Vulkan does not mean there is device support. For device support, one will need to make sure a Vulkan Driver is available that fully implements Vulkan. There are a few different variations of a Vulkan Driver.

6.2.1. Hardware Implementation

A driver targeting a physical piece of GPU hardware is the most common case for a Vulkan implementation. It is important to understand that while a certain GPU might have the physical capabilities of running Vulkan, it still requires a driver to control it. The driver is in charge of getting the Vulkan calls mapped to the hardware in the most efficient way possible.

Drivers, like any software, are updated and this means there can be many variations of drivers for the same physical device and platform. There is a Vulkan Database, developed and maintained by Sascha Willems, which is the largest collection of recorded Vulkan implementation details

| Note | Just because a physical device or platform isn’t in the Vulkan Database doesn’t mean it couldn’t exist. |

6.2.2. Null Driver

The term “null driver” is given to any driver that accepts Vulkan API calls, but does not do anything with them. This is common for testing interactions with the driver without needing any working implementation backing it. Many uses cases such as creating CTS tests for new features, testing the Validation Layers, and more rely on the idea of a null driver.

Khronos provides the Mock ICD as one implementation of a null driver that works on various platforms.

6.2.3. Software Implementation

It is possible to create a Vulkan implementation that only runs on the CPU. This is useful if there is a need to test Vulkan that is hardware independent, but unlike the null driver, also outputs a valid result.

SwiftShader is an example of CPU-based implementation.

6.3. Ways of Checking for Vulkan

6.3.1. VIA (Vulkan Installation Analyzer)

Included in the Vulkan SDK is a utility to check the Vulkan installation on your computer. It is supported on Windows, Linux, and macOS. VIA can:

-

Determine the state of Vulkan components on your system

-

Validate that your Vulkan Loader and drivers are installed properly

-

Capture your system state in a form that can be used as an attachment when submitting bugs

View the SDK documentation on VIA for more information.

6.3.2. Hello Create Instance

A simple way to check for Vulkan support cross platform is to create a simple “Hello World” Vulkan application. The vkCreateInstance function is used to create a Vulkan Instance and is also the shortest way to write a valid Vulkan application.

The Vulkan SDK provides a minimal vkCreateInstance example 01-init_instance.cpp that can be used.

permalink:/Notes/004-3d-rendering/vulkan/chapters/versions.html layout: default ---

7. Versions

Vulkan works on a major, minor, patch versioning system. Currently, there are 3 minor version releases of Vulkan (1.0, 1.1, 1.2 and 1.3) which are backward compatible with each other. An application can use vkEnumerateInstanceVersion to check what version of a Vulkan instance is supported. There is also a white paper by LunarG on how to query and check for the supported version. While working across minor versions, there are some subtle things to be aware of.

7.1. Instance and Device

It is important to remember there is a difference between the instance-level version and device-level version. It is possible that the loader and implementations will support different versions.

The Querying Version Support section in the Vulkan Spec goes into details on how to query for supported versions at both the instance and device level.

7.2. Header

There is only one supported header for all major releases of Vulkan. This means that there is no such thing as “Vulkan 1.0 headers” as all headers for a minor and patch version are unified. This should not be confused with the ability to generate a 1.0 version of the Vulkan Spec, as the Vulkan Spec and header of the same patch version will match. An example would be that the generated 1.0.42 Vulkan Spec will match the 1.x.42 header.

It is highly recommended that developers try to keep up to date with the latest header files released. The Vulkan SDK comes in many versions which map to the header version it will have been packaged for.

7.3. Extensions

Between minor versions of Vulkan, some extensions get promoted to the core version. When targeting a newer minor version of Vulkan, an application will not need to enable the newly promoted extensions at the instance and device creation. However, if an application wants to keep backward compatibility, it will need to enable the extensions.

For a summary of what is new in each version, check out the Vulkan Release Summary

7.4. Structs and enums

Structs and enums are dependent on the header file being used and not the version of the instance or device queried. For example, the struct VkPhysicalDeviceFeatures2 used to be VkPhysicalDeviceFeatures2KHR before Vulkan 1.1 was released. Regardless of the 1.x version of Vulkan being used, an application should use VkPhysicalDeviceFeatures2 in its code as it matches the newest header version. For applications that did have VkPhysicalDeviceFeatures2KHR in the code, there is no need to worry as the Vulkan header also aliases any promoted structs and enums (typedef VkPhysicalDeviceFeatures2 VkPhysicalDeviceFeatures2KHR;).

The reason for using the newer naming is that the Vulkan Spec itself will only refer to VkPhysicalDeviceFeatures2 regardless of what version of the Vulkan Spec is generated. Using the newer naming makes it easier to quickly search for where the structure is used.

7.5. Functions

Since functions are used to interact with the loader and implementations, there needs to be a little more care when working between minor versions. As an example, let’s look at vkGetPhysicalDeviceFeatures2KHR which was promoted to core as vkGetPhysicalDeviceFeatures2 from Vulkan 1.0 to Vulkan 1.1. Looking at the Vulkan header both are declared.

typedef void (VKAPI_PTR *PFN_vkGetPhysicalDeviceFeatures2)(VkPhysicalDevice physicalDevice, VkPhysicalDeviceFeatures2* pFeatures);

// ...

typedef void (VKAPI_PTR *PFN_vkGetPhysicalDeviceFeatures2KHR)(VkPhysicalDevice physicalDevice, VkPhysicalDeviceFeatures2* pFeatures);The main difference is when calling vkGetInstanceProcAddr(instance, “vkGetPhysicalDeviceFeatures2”); a Vulkan 1.0 implementation may not be aware of vkGetPhysicalDeviceFeatures2 existence and vkGetInstanceProcAddr will return NULL. To be backward compatible with Vulkan 1.0 in this situation, the application should query for vkGetPhysicalDeviceFeatures2KHR as a 1.1 Vulkan implementation will likely have the function directly pointed to the vkGetPhysicalDeviceFeatures2 function pointer internally.

| Note | The |

7.6. Features

Between minor versions, it is possible that some feature bits are added, removed, made optional, or made mandatory. All details of features that have changed are described in the Core Revisions section.

The Feature Requirements section in the Vulkan Spec can be used to view the list of features that are required from implementations across minor versions.

7.7. Limits

Currently, all versions of Vulkan share the same minimum/maximum limit requirements, but any changes would be listed in the Limit Requirements section of the Vulkan Spec.

7.8. SPIR-V

Every minor version of Vulkan maps to a version of SPIR-V that must be supported.

-

Vulkan 1.0 supports SPIR-V 1.0

-

Vulkan 1.1 supports SPIR-V 1.3 and below

-

Vulkan 1.2 supports SPIR-V 1.5 and below

-

Vulkan 1.3 supports SPIR-V 1.6 and below

It is up to the application to make sure that the SPIR-V in VkShaderModule is of a valid version to the corresponding Vulkan version.

permalink:/Notes/004-3d-rendering/vulkan/chapters/vulkan_release_summary.html layout: default ---

8. Vulkan Release Summary

Each minor release version of Vulkan promoted a different set of extension to core. This means that it’s no longer necessary to enable an extensions to use it’s functionality if the application requests at least that Vulkan version (given that the version is supported by the implementation).

The following summary contains a list of the extensions added to the respective core versions and why they were added. This list is taken from the Vulkan spec, but links jump to the various spots in the Vulkan Guide

8.1. Vulkan 1.1

| Note |

Vulkan 1.1 was released on March 7, 2018

Besides the listed extensions below, Vulkan 1.1 introduced the subgroups, protected memory, and the ability to query the instance version.

8.2. Vulkan 1.2

| Note |

Vulkan 1.2 was released on January 15, 2020

8.3. Vulkan 1.3

| Note |

Vulkan 1.3 was released on January 25, 2022

permalink: /Notes/004-3d-rendering/vulkan/chapters/what_is_spirv.html layout: default ---

9. What is SPIR-V

| Note | Please read the SPIRV-Guide for more in detail information about SPIR-V |

SPIR-V is a binary intermediate representation for graphical-shader stages and compute kernels. With Vulkan, an application can still write their shaders in a high-level shading language such as GLSL or HLSL, but a SPIR-V binary is needed when using vkCreateShaderModule. Khronos has a very nice white paper about SPIR-V and its advantages, and a high-level description of the representation. There are also two great Khronos presentations from Vulkan DevDay 2016 here and here (video of both).

9.1. SPIR-V Interface and Capabilities

Vulkan has an entire section that defines how Vulkan interfaces with SPIR-V shaders. Most valid usages of interfacing with SPIR-V occur during pipeline creation when shaders are compiled together.

SPIR-V has many capabilities as it has other targets than just Vulkan. To see the supported capabilities Vulkan requires, one can reference the Appendix. Some extensions and features in Vulkan are just designed to check if some SPIR-V capabilities are supported or not.

9.2. Compilers

9.2.1. glslang

glslang is the Khronos reference front-end for GLSL, HLSL and ESSL, and sample SPIR-V generator. There is a standalone glslangValidator tool that is included that can be used to create SPIR-V from GLSL, HLSL and ESSL.

9.2.2. Shaderc

A collection of tools, libraries, and tests for Vulkan shader compilation hosted by Google. It contains glslc which wraps around core functionality in glslang and SPIRV-Tools. Shaderc also contains spvc which wraps around core functionality in SPIRV-Cross and SPIRV-Tools.

Shaderc builds both tools as a standalone command line tool (glslc) as well as a library to link to (libshaderc).

9.2.4. Clspv

Clspv is a prototype compiler for a subset of OpenCL C to SPIR-V to be used as Vulkan compute shaders.

9.3. Tools and Ecosystem

There is a rich ecosystem of tools to take advantage of SPIR-V. The Vulkan SDK gives an overview of all the SPIR-V tools that are built and packaged for developers.

9.3.1. SPIRV-Tools

The Khronos SPIRV-Tools project provides C and C++ APIs and a command line interface to work with SPIR-V modules. More information in the SPIRV-Guide.

9.3.2. SPIRV-Cross

The Khronos SPIRV-Cross project is a practical tool and library for performing reflection on SPIR-V and disassembling SPIR-V back to a desired high-level shading language. For more details, Hans Kristian, the main developer of SPIR-V Cross, has given two great presentations about what it takes to create a tool such as SPIR-V Cross from 2018 Vulkanised (video) and 2019 Vulkanised (video)

9.3.3. SPIRV-LLVM

The Khronos SPIRV-LLVM project is a LLVM framework with SPIR-V support. It’s intended to contain a bi-directional converter between LLVM and SPIR-V. It also serves as a foundation for LLVM-based front-end compilers targeting SPIR-V.

permalink:/Notes/004-3d-rendering/vulkan/chapters/portability_initiative.html layout: default ---

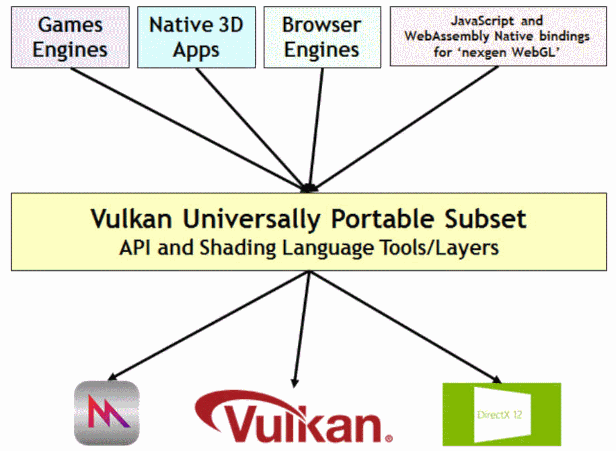

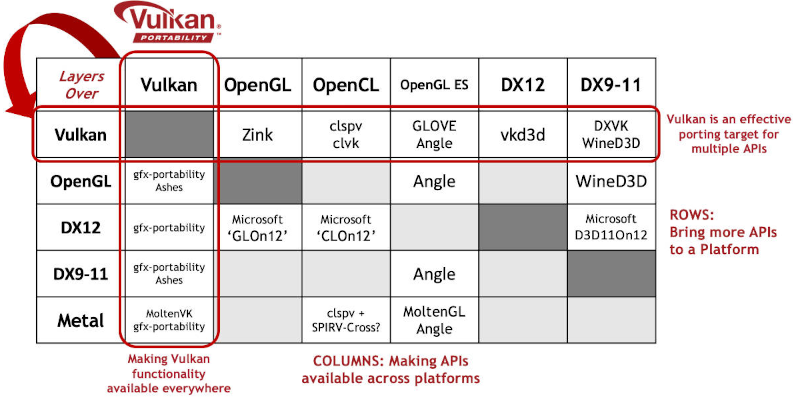

10. Portability Initiative

| Note | Notice Currently a provisional VK_KHR_portability_subset extension specification is available with the vulkan_beta.h headers. More information can found in the press release. |

The Vulkan Portability Initiative is an effort inside the Khronos Group to develop resources to define and evolve the subset of Vulkan capabilities that can be made universally available at native performance levels across all major platforms, including those not currently served by Vulkan native drivers. In a nutshell, this initiative is about making Vulkan viable on platforms that do not natively support the API (e.g MacOS and iOS).

10.1. Translation Layer

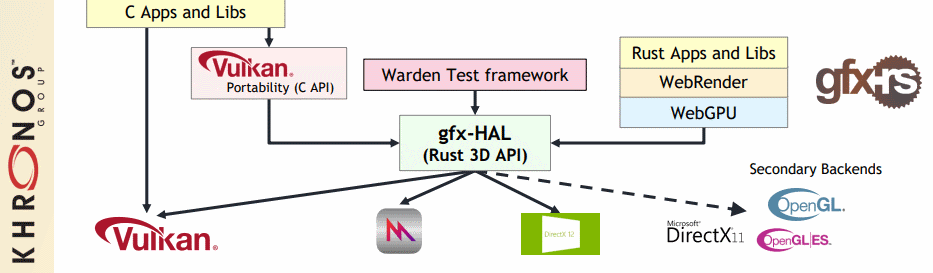

Layered implementations fight industry fragmentation by enabling more applications to run on more platforms, even in a fragmented industry API landscape. For example, the first row in the diagram below shows how Vulkan is being used as a porting target to bring additional APIs to platforms to enable more content without the need for additional kernel-level drivers. Layered API implementations have been used to successfully ship production applications on multiple platforms.

The columns in the figure show layering projects being used to make APIs available across additional platforms, even if no native drivers are available, giving application developers the deployment flexibility they need to develop with the graphics API of their choice and ship across multiple platforms. The first column in the diagram is the work of the Vulkan Portability Initiative, enabling layered implementations of Vulkan functionality across diverse platforms.

10.3. gfx-rs

Mozilla is currently helping drive gfx-rs portability to use gfx-hal as a way to interface with various other APIs.

permalink:/Notes/004-3d-rendering/vulkan/chapters/vulkan_cts.html layout: default ---

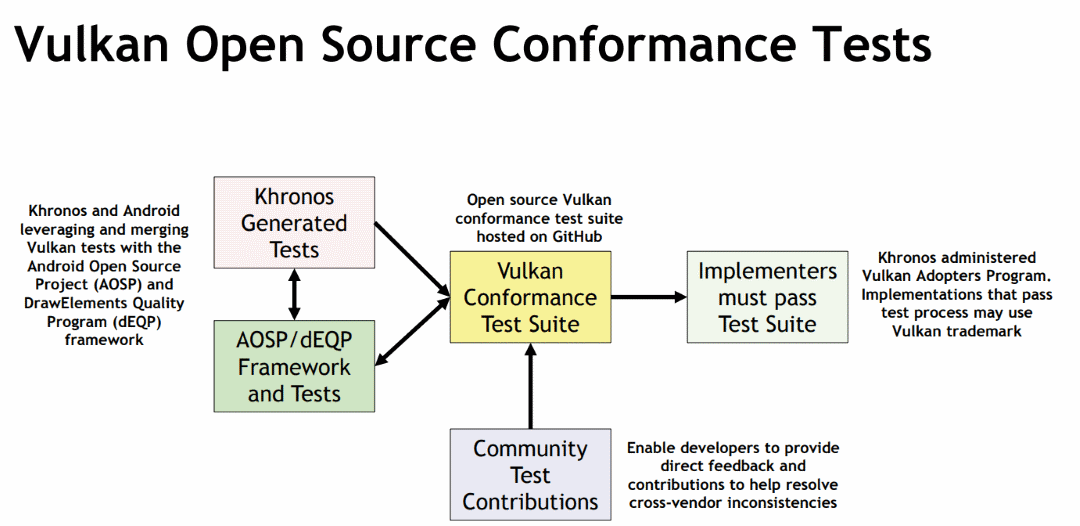

11. Vulkan CTS

The Vulkan Conformance Tests Suite (CTS) is a set of tests used to verify the conformance of an implementation. A conformant implementation shows that it has successfully passed CTS and it is a valid implementation of Vulkan. A list of conformant products is publicly available.

Any company with a conformant implementation may freely use the publicly released Vulkan specification to create a product. All implementations of the Vulkan API must be tested for conformance in the Khronos Vulkan Adopter Program before the Vulkan name or logo may be used in association with an implementation of the API.

The Vulkan CTS source code is freely available and anyone is free to create and add a new test to the Vulkan CTS as long as they follow the Contributing Wiki.

An application can query the version of CTS passed for an implementation using the VkConformanceVersion property via the VK_KHR_driver_properties extension (this was promoted to core in Vulkan 1.2).

permalink: /Notes/004-3d-rendering/vulkan/chapters/development_tools.html ---

12. Development Tools

The Vulkan ecosystem consists of many tools for development. This is not a full list and this is offered as a good starting point for many developers. Please continue to do your own research and search for other tools as the development ecosystem is much larger than what can reasonably fit on a single Markdown page.

Khronos hosts Vulkan Samples, a collection of code and tutorials that demonstrates API usage and explains the implementation of performance best practices.

LunarG is privately sponsored to develop and maintain Vulkan ecosystem components and is currently the curator for the Vulkan Loader and Vulkan Validation Layers Khronos Group repositories. In addition, LunarG delivers the Vulkan SDK and develops other key tools such as the Vulkan Configurator and GFXReconstruct.

12.1. Vulkan Layers

Layers are optional components that augment the Vulkan system. They can intercept, evaluate, and modify existing Vulkan functions on their way from the application down to the hardware. Layers are implemented as libraries that can be enabled and configured using Vulkan Configurator.

12.1.1. Khronos Layers

-

VK_LAYER_KHRONOS_validation, the Khronos Validation Layer. It is every developer’s first layer of defense when debugging their Vulkan application and this is the reason it is at the top of this list. Read the Validation Overview chapter for more details. The validation layer included multiple features:-

Synchronization Validation: Identify resource access conflicts due to missing or incorrect synchronization operations between actions (Draw, Copy, Dispatch, Blit) reading or writing the same regions of memory.

-

GPU-Assisted Validation: Instrument shader code to perform run-time checks for error conditions produced during shader execution.

-

Shader printf: Debug shader code by “printing” any values of interest to the debug callback or stdout.

-

Best Practices Warnings: Highlights potential performance issues, questionable usage patterns, common mistakes.

-

-

VK_LAYER_KHRONOS_synchronization2, the Khronos Synchronization2 layer. TheVK_LAYER_KHRONOS_synchronization2layer implements theVK_KHR_synchronization2extension. By default, it will disable itself if the underlying driver provides the extension.

12.1.2. Vulkan SDK layers

Besides the Khronos Layers, the Vulkan SDK included additional useful platform independent layers.

-

VK_LAYER_LUNARG_api_dump, a layer to log Vulkan API calls. The API dump layer prints API calls, parameters, and values to the identified output stream. -

VK_LAYER_LUNARG_gfxreconstruct, a layer for capturing frames created with Vulkan. This layer is a part of GFXReconstruct, a software for capturing and replaying Vulkan API calls. Full Android support is also available at https://github.com/LunarG/gfxreconstruct -

VK_LAYER_LUNARG_device_simulation, a layer to test Vulkan applications portability. The device simulation layer can be used to test whether a Vulkan application would run on a Vulkan device with lower capabilities. -

VK_LAYER_LUNARG_screenshot, a screenshot layer. Captures the rendered image of a Vulkan application to a viewable image. -

VK_LAYER_LUNARG_monitor, a framerate monitor layer. Display the Vulkan application FPS in the window title bar to give a hint about the performance.

12.1.3. Vulkan Third-party layers

There are also other publicly available layers that can be used to help in development.

-

VK_LAYER_ARM_mali_perf_doc, the ARM PerfDoc layer. Checks Vulkan applications for best practices on Arm Mali devices. -

VK_LAYER_IMG_powervr_perf_doc, the PowerVR PerfDoc layer. Checks Vulkan applications for best practices on Imagination Technologies PowerVR devices. -

VK_LAYER_adreno, the Vulkan Adreno Layer. Checks Vulkan applications for best practices on Qualcomm Adreno devices.

12.2. Debugging

Debugging something running on a GPU can be incredibly hard, luckily there are tools out there to help.

12.3. Profiling

With anything related to a GPU it is best to not assume and profile when possible. Here is a list of known profilers to aid in your development.

-

AMD Radeon GPU Profiler - Low-level performance analysis tool for AMD Radeon GPUs.

-

Arm Streamline Performance Analyzer - Visualize the performance of mobile games and applications for a broad range of devices, using Arm Mobile Studio.

-

Intel® GPA - Intel’s Graphics Performance Analyzers that supports capturing and analyzing multi-frame streams of Vulkan apps.

-

OCAT - The Open Capture and Analytics Tool (OCAT) provides an FPS overlay and performance measurement for D3D11, D3D12, and Vulkan.

-

Qualcomm Snapdragon Profiler - Profiling tool targeting Adreno GPU.

-

VKtracer - Cross-vendor and cross-platform profiler.

permalink:/Notes/004-3d-rendering/vulkan/chapters/validation_overview.html layout: default ---

13. Vulkan Validation Overview

| Note | The purpose of this section is to give a full overview of how Vulkan deals with valid usage of the API. |

13.1. Valid Usage (VU)

A VU is explicitly defined in the Vulkan Spec as:

| Note | set of conditions that must be met in order to achieve well-defined run-time behavior in an application. |

One of the main advantages of Vulkan, as an explicit API, is that the implementation (driver) doesn’t waste time checking for valid input. In OpenGL, the implementation would have to always check for valid usage which added noticeable overhead. There is no glGetError equivalent in Vulkan.

The valid usages will be listed in the spec after every function and structure. For example, if a VUID checks for an invalid VkImage at VkBindImageMemory then the valid usage in the spec is found under VkBindImageMemory. This is because the Validation Layers will only know about all the information at VkBindImageMemory during the execution of the application.

13.2. Undefined Behavior

When an application supplies invalid input, according to the valid usages in the spec, the result is undefined behavior. In this state, Vulkan makes no guarantees as anything is possible with undefined behavior.

VERY IMPORTANT: While undefined behavior might seem to work on one implementation, there is a good chance it will fail on another.

13.3. Valid Usage ID (VUID)

A VUID is an unique ID given to each valid usage. This allows a way to point to a valid usage in the spec easily.

Using VUID-vkBindImageMemory-memoryOffset-01046 as an example, it is as simple as adding the VUID to an anchor in the HMTL version of the spec (vkspec.html#VUID-vkBindImageMemory-memoryOffset-01046) and it will jump right to the VUID.

13.4. Khronos Validation Layer

Since Vulkan doesn’t do any error checking, it is very important, when developing, to enable the Validation Layers right away to help catch invalid behavior. Applications should also never ship the Validation Layers with their application as they noticeably reduce performance and are designed for the development phase.

| Note | The Khronos Validation Layer used to consist of multiple layers but now has been unified to a single |

13.4.1. Getting Validation Layers

The Validation Layers are constantly being updated and improved so it is always possible to grab the source and build it yourself. In case you want a prebuit version there are various options for all supported platforms:

-

Android - Binaries are released on GitHub with most up to date version. The NDK will also comes with the Validation Layers built and information on how to use them.

-

Linux - The Vulkan SDK comes with the Validation Layers built and instructions on how to use them on Linux.

-

MacOS - The Vulkan SDK comes with the Validation Layers built and instructions on how to use them on MacOS.

-

Windows - The Vulkan SDK comes with the Validation Layers built and instructions on how to use them on Windows.

13.5. Breaking Down a Validation Error Message

The Validation Layers attempt to supply as much useful information as possible when an error occurs. The following examples are to help show how to get the most information out of the Validation Layers

13.5.1. Example 1 - Implicit Valid Usage

This example shows a case where an implicit VU is triggered. There will not be a number at the end of the VUID.

Validation Error: [ VUID-vkBindBufferMemory-memory-parameter ] Object 0: handle =

0x20c8650, type = VK_OBJECT_TYPE_INSTANCE; | MessageID = 0xe9199965 | Invalid

VkDeviceMemory Object 0x60000000006. The Vulkan spec states: memory must be a valid

VkDeviceMemory handle (https://www.khronos.org/registry/vulkan/specs/1.1-extensions/

html/vkspec.html#VUID-vkBindBufferMemory-memory-parameter)-

The first thing to notice is the VUID is listed first in the message (

VUID-vkBindBufferMemory-memory-parameter)-

There is also a link at the end of the message to the VUID in the spec

-

-

The Vulkan spec states:is the quoted VUID from the spec. -

The

VK_OBJECT_TYPE_INSTANCEis the VkObjectType -

Invalid VkDeviceMemory Object 0x60000000006is the Dispatchable Handle to help show whichVkDeviceMemoryhandle was the cause of the error.

13.5.2. Example 2 - Explicit Valid Usage

This example shows an error where some VkImage is trying to be bound to 2 different VkDeviceMemory objects

Validation Error: [ VUID-vkBindImageMemory-image-01044 ] Object 0: handle =

0x90000000009, name = myTextureMemory, type = VK_OBJECT_TYPE_DEVICE_MEMORY; Object 1:

handle = 0x70000000007, type = VK_OBJECT_TYPE_IMAGE; Object 2: handle = 0x90000000006,

name = myIconMemory, type = VK_OBJECT_TYPE_DEVICE_MEMORY; | MessageID = 0x6f3eac96 |

In vkBindImageMemory(), attempting to bind VkDeviceMemory 0x90000000009[myTextureMemory]

to VkImage 0x70000000007[] which has already been bound to VkDeviceMemory

0x90000000006[myIconMemory]. The Vulkan spec states: image must not already be

backed by a memory object (https://www.khronos.org/registry/vulkan/specs/1.1-extensions/

html/vkspec.html#VUID-vkBindImageMemory-image-01044)-

Example 2 is about the same as Example 1 with the exception that the

namethat was attached to the object (name = myTextureMemory). This was done using the VK_EXT_debug_util extension (Sample of how to use the extension). Note that the old way of using VK_EXT_debug_report might be needed on legacy devices that don’t supportVK_EXT_debug_util. -

There were 3 objects involved in causing this error.

-

Object 0 is a

VkDeviceMemorynamedmyTextureMemory -

Object 1 is a

VkImagewith no name -

Object 2 is a

VkDeviceMemorynamedmyIconMemory

-

-

With the names it is easy to see “In

vkBindImageMemory(), themyTextureMemorymemory was attempting to bind to an image already been bound to themyIconMemorymemory”.

Each error message contains a uniform logging pattern. This allows information to be easily found in any error. The pattern is as followed:

-

Log status (ex.

Error:,Warning:, etc) -

The VUID

-

Array of objects involved

-

Index of array

-

Dispatch Handle value

-

Optional name

-

Object Type

-

-

Function or struct error occurred in

-

Message the layer has created to help describe the issue

-

The full Valid Usage from the spec

-

Link to the Valid Usage

13.6. Multiple VUIDs

| Note | The following is not ideal and is being looked into how to make it simpler |

Currently, the spec is designed to only show the VUIDs depending on the version and extensions the spec was built with. Simply put, additions of extensions and versions may alter the VU language enough (from new API items added) that a separate VUID is created.

An example of this from the Vulkan-Docs where the spec in generated from

* [[VUID-VkPipelineLayoutCreateInfo-pSetLayouts-00287]]

...What this creates is two very similar VUIDs

In this example, both VUIDs are very similar and the only difference is the fact VK_DESCRIPTOR_SET_LAYOUT_CREATE_UPDATE_AFTER_BIND_POOL_BIT is referenced in one and not this other. This is because the enum was added with the addition of VK_EXT_descriptor_indexing which is now part of Vulkan 1.2.

This means the 2 valid html links to the spec would look like

-

1.1/html/vkspec.html#VUID-VkPipelineLayoutCreateInfo-pSetLayouts-00287 -

1.2/html/vkspec.html#VUID-VkPipelineLayoutCreateInfo-descriptorType-03016

The Validation Layer uses the device properties of the application in order to decide which one to display. So in this case, if you are running on a Vulkan 1.2 implementation or a device that supports VK_EXT_descriptor_indexing it will display the VUID 03016.

13.7. Special Usage Tags

The Best Practices layer will produce warnings when an application tries to use any extension with special usage tags. An example of such an extension is VK_EXT_transform_feedback which is only designed for emulation layers. If an application’s intended usage corresponds to one of the special use cases, the following approach will allow you to ignore the warnings.

Ignoring Special Usage Warnings with VK_EXT_debug_report

VkBool32 DebugReportCallbackEXT(/* ... */ const char* pMessage /* ... */)

{

// If pMessage contains "specialuse-extension", then exit

if(strstr(pMessage, "specialuse-extension") != NULL) {

return VK_FALSE;

};

// Handle remaining validation messages

}Ignoring Special Usage Warnings with VK_EXT_debug_utils

VkBool32 DebugUtilsMessengerCallbackEXT(/* ... */ const VkDebugUtilsMessengerCallbackDataEXT* pCallbackData /* ... */)

{

// If pMessageIdName contains "specialuse-extension", then exit

if(strstr(pCallbackData->pMessageIdName, "specialuse-extension") != NULL) {

return VK_FALSE;

};

// Handle remaining validation messages

}permalink: /Notes/004-3d-rendering/vulkan/chapters/decoder_ring.html ---

14. Vulkan Decoder Ring

This section provides a mapping between the Vulkan term for a concept and the terminology used in other APIs. It is organized in alphabetical order by Vulkan term. If you are searching for the Vulkan equivalent of a concept used in an API you know, you can find the term you know in this list and then search the Vulkan specification for the corresponding Vulkan term.

| Note | Not everything will be a perfect 1:1 match, the goal is to give a rough idea where to start looking in the spec. |

| Vulkan | GL,GLES | DirectX | Metal |

|---|---|---|---|

buffer device address | GPU virtual address | ||

buffer view, texel buffer | texture buffer | typed buffer SRV, typed buffer UAV | texture buffer |

color attachments | color attachments | render target | color attachments or render target |

command buffer | part of context, display list, NV_command_list | command list | command buffer |

command pool | part of context | command allocator | command queue |

conditional rendering | conditional rendering | predication | |

depth/stencil attachment | depth Attachment and stencil Attachment | depth/stencil view | depth attachment and stencil attachment, depth render target and stencil render target |

descriptor | descriptor | argument | |

descriptor pool | descriptor heap | heap | |

descriptor set | descriptor table | argument buffer | |

descriptor set layout binding, push descriptor | root parameter | argument in shader parameter list | |

device group | implicit (E.g. SLI,CrossFire) | multi-adapter device | peer group |

device memory | heap | placement heap | |

event | split barrier | ||

fence | fence, sync |

| completed handler, |

fragment shader | fragment shader | pixel shader | fragment shader or fragment function |

fragment shader interlock | rasterizer order view (ROV) | raster order group | |

framebuffer | framebuffer object | collection of resources | |

heap | pool | ||

image | texture and renderbuffer | texture | texture |

image layout | resource state | ||

image tiling | image layout, swizzle | ||

image view | texture view | render target view, depth/stencil view, shader resource view, unordered access view | texture view |

interface matching ( | varying (removed in GLSL 4.20) | Matching semantics | |

invocation | invocation | thread, lane | thread, lane |

layer | slice | slice | |

logical device | context | device | device |

memory type | automatically managed, texture storage hint, buffer storage | heap type, CPU page property | storage mode, CPU cache mode |

multiview rendering | multiview rendering | view instancing | vertex amplification |

physical device | adapter, node | device | |

pipeline | state and program or program pipeline | pipeline state | pipeline state |

pipeline barrier, memory barrier | texture barrier, memory barrier | resource barrier | texture barrier, memory barrier |

pipeline layout | root signature | ||

queue | part of context | command queue | command queue |

semaphore | fence, sync | fence | fence, event |

shader module | shader object | resulting | shader library |

shading rate attachment | shading rate image | rasterization rate map | |

sparse block | sparse block | tile | sparse tile |

sparse image | sparse texture | reserved resource (D12), tiled resource (D11) | sparse texture |

storage buffer | shader storage buffer | raw or structured buffer UAV | buffer in |

subgroup | subgroup | wave | SIMD-group, quadgroup |

surface | HDC, GLXDrawable, EGLSurface | window | layer |

swapchain | Part of HDC, GLXDrawable, EGLSurface | swapchain | layer |

swapchain image | default framebuffer | drawable texture | |

task shader | amplification shader | ||

tessellation control shader | tessellation control shader | hull shader | tessellation compute kernel |

tessellation evaluation shader | tessellation evaluation shader | domain shader | post-tessellation vertex shader |

timeline semaphore | D3D12 fence | event | |

transform feedback | transform feedback | stream-out | |

uniform buffer | uniform buffer | constant buffer views (CBV) | buffer in |

workgroup | workgroup | threadgroup | threadgroup |

15. Using Vulkan

permalink: /Notes/004-3d-rendering/vulkan/chapters/loader.html ---

16. Loader

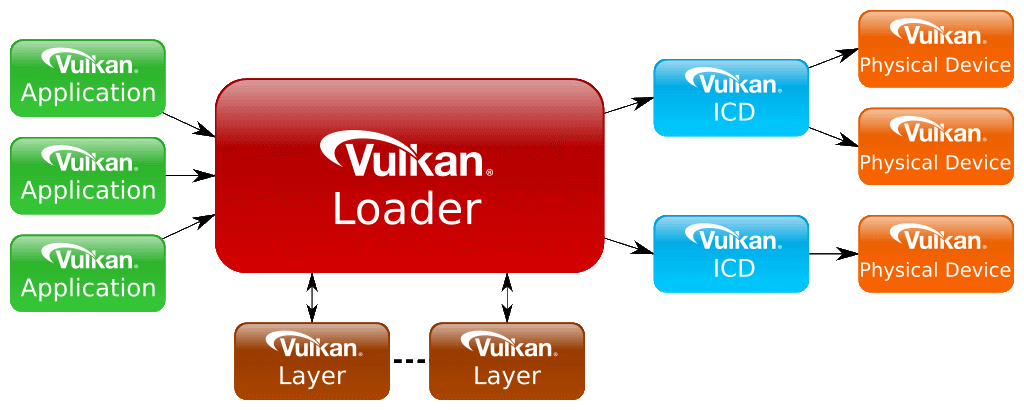

The loader is responsible for mapping an application to Vulkan layers and Vulkan installable client drivers (ICD).

Anyone can create their own Vulkan Loader, as long as they follow the Loader Interface. One can build the reference loader as well or grab a built version from the Vulkan SDK for selected platforms.

16.1. Linking Against the Loader

The Vulkan headers only provide the Vulkan function prototypes. When building a Vulkan application you have to link it to the loader or you will get errors about undefined references to the Vulkan functions. There are two ways of linking the loader, directly and indirectly, which should not be confused with “static and dynamic linking”.

-

Directly linking at compile time

-

This requires having a built Vulkan Loader (either as a static or dynamic library) that your build system can find.

-

Build systems (Visual Studio, CMake, etc) have documentation on how to link to the library. Try searching “(InsertBuildSystem) link to external library” online.

-

-

Indirectly linking at runtime

-

Using dynamic symbol lookup (via system calls such as

dlsymanddlopen) an application can initialize its own dispatch table. This allows an application to fail gracefully if the loader cannot be found. It also provides the fastest mechanism for the application to call Vulkan functions. -

Volk is an open source implementation of a meta-loader to help simplify this process.

-

16.2. Platform Variations

Each platform can set its own rules on how to enforce the Vulkan Loader.

16.2.1. Android

Android devices supporting Vulkan provide a Vulkan loader already built into the OS.

A vulkan_wrapper.c/h file is provided in the Android NDK for indirectly linking. This is needed, in part, because the Vulkan Loader can be different across different vendors and OEM devices.

16.2.2. Linux

The Vulkan SDK provides a pre-built loader for Linux.

The Getting Started page in the Vulkan SDK explains how the loader is found on Linux.

16.2.3. MacOS

The Vulkan SDK provides a pre-built loader for MacOS

The Getting Started page in the Vulkan SDK explains how the loader is found on MacOS.

16.2.4. Windows

The Vulkan SDK provides a pre-built loader for Windows.

The Getting Started page in the Vulkan SDK explains how the loader is found on Windows.

permalink: /Notes/004-3d-rendering/vulkan/chapters/layers.html ---

17. Layers

Layers are optional components that augment the Vulkan system. They can intercept, evaluate, and modify existing Vulkan functions on their way from the application down to the hardware. Layer properties can be queried from an application with vkEnumerateInstanceLayerProperties.

17.1. Using Layers

Layers are packaged as shared libraries that get dynamically loaded in by the loader and inserted between it and the application. The two things needed to use layers are the location of the binary files and which layers to enable. The layers to use can be either explicitly enabled by the application or implicitly enabled by telling the loader to use them. More details about implicit and explicit layers can be found in the Loader and Layer Interface.

The Vulkan SDK contains a layer configuration document that is very specific to how to discover and configure layers on each of the platforms.

17.2. Vulkan Configurator Tool

Developers on Windows, Linux, and macOS can use the Vulkan Configurator, vkconfig, to enable explicit layers and disable implicit layers as well as change layer settings from a graphical user interface. Please see the Vulkan Configurator documentation in the Vulkan SDK for more information on using the Vulkan Configurator.

17.3. Device Layers Deprecation

There used to be both instance layers and device layers, but device layers were deprecated early in Vulkan’s life and should be avoided.

17.4. Creating a Layer

Anyone can create a layer as long as it follows the loader to layer interface which is how the loader and layers agree to communicate with each other.

LunarG provides a framework for layer creation called the Layer Factory to help develop new layers (Video presentation). The layer factory hides the majority of the loader-layer interface, layer boilerplate, setup and initialization, and complexities of layer development. During application development, the ability to easily create a layer to aid in debugging your application can be useful. For more information, see the Vulkan Layer Factory documentation.

17.5. Platform Variations

The way to load a layer in implicitly varies between loader and platform.

17.5.1. Android

As of Android P (Android 9 / API level 28), if a device is in a debuggable state such that getprop ro.debuggable returns 1, then the loader will look in /data/local/debug/vulkan.

Starting in Android P (Android 9 / API level 28) implicit layers can be pushed using ADB if the application was built in debug mode.

There is no way other than the options above to use implicit layers.

17.5.2. Linux

The Vulkan SDK explains how to use implicit layers on Linux.

17.5.3. MacOS

The Vulkan SDK explains how to use implicit layers on MacOS.

17.5.4. Windows

The Vulkan SDK explains how to use implicit layers on Windows.

permalink:/Notes/004-3d-rendering/vulkan/chapters/querying_extensions_features.html layout: default ---

18. Querying Properties, Extensions, Features, Limits, and Formats

One of Vulkan’s main features is that is can be used to develop on multiple platforms and devices. To make this possible, an application is responsible for querying the information from each physical device and then basing decisions on this information.

The items that can be queried from a physical device

-

Properties

-

Features

-

Extensions

-

Limits

-

Formats

18.1. Properties

There are many other components in Vulkan that are labeled as properties. The term “properties” is an umbrella term for any read-only data that can be queried.

18.2. Extensions

| Note | Check out the Enabling Extensions chapter for more information. There is a Registry with all available extensions. |

There are many times when a set of new functionality is desired in Vulkan that doesn’t currently exist. Extensions have the ability to add new functionality. Extensions may define new Vulkan functions, enums, structs, or feature bits. While all of these extended items are found by default in the Vulkan Headers, it is undefined behavior to use extended Vulkan if the extensions are not enabled.

18.3. Features

| Note | Checkout the Enabling Features chapter for more information. |

Features describe functionality which is not supported on all implementations. Features can be queried and then enabled when creating the VkDevice. Besides the list of all features, some features are mandatory due to newer Vulkan versions or use of extensions.

A common technique is for an extension to expose a new struct that can be passed through pNext that adds more features to be queried.

18.4. Limits

Limits are implementation-dependent minimums, maximums, and other device characteristics that an application may need to be aware of. Besides the list of all limits, some limits also have minimum/maximum required values guaranteed from a Vulkan implementation.

18.5. Formats

Vulkan provides many VkFormat that have multiple VkFormatFeatureFlags each holding a various VkFormatFeatureFlagBits bitmasks that can be queried.

Checkout the Format chapter for more information.

18.6. Tools

There are a few tools to help with getting all the information in a quick and in a human readable format.

vulkaninfo is a command line utility for Windows, Linux, and macOS that enables you to see all the available items listed above about your GPU. Refer to the Vulkaninfo documentation in the Vulkan SDK.

The Vulkan Hardware Capability Viewer app developed by Sascha Willems, is an Android app to display all details for devices that support Vulkan.

permalink: /Notes/004-3d-rendering/vulkan/chapters/enabling_extensions.html ---

19. Enabling Extensions

This section goes over the logistics for enabling extensions.

19.1. Two types of extensions

There are two groups of extensions, instance extensions and device extensions. Simply put, instance extensions are tied to the entire VkInstance while device extensions are tied to only a single VkDevice instance.

This information is documented under the “Extension Type” section of each extension reference page. Example below:

19.2. Check for support

An application can query the physical device first to check if the extension is supported with vkEnumerateInstanceExtensionProperties or vkEnumerateDeviceExtensionProperties.

// Simple example

uint32_t count = 0;

vkEnumerateDeviceExtensionProperties(physicalDevice, nullptr, &count, nullptr);

std::vector<VkExtensionProperties> extensions(count);

vkEnumerateDeviceExtensionProperties(physicalDevice, nullptr, &count, extensions.data());

// Checking for support of VK_KHR_bind_memory2

for (uint32_t i = 0; i < count; i++) {

if (strcmp(VK_KHR_BIND_MEMORY_2_EXTENSION_NAME, extensions[i].extensionName) == 0) {

break; // VK_KHR_bind_memory2 is supported

}

}19.3. Enable the Extension

Even if the extension is supported by the implementation, it is undefined behavior to use the functionality of the extension unless it is enabled at VkInstance or VkDevice creation time.

Here is an example of what is needed to enable an extension such as VK_KHR_driver_properties.

// VK_KHR_get_physical_device_properties2 is required to use VK_KHR_driver_properties

// since it's an instance extension it needs to be enabled before at VkInstance creation time

std::vector<const char*> instance_extensions;

instance_extensions.push_back(VK_KHR_GET_PHYSICAL_DEVICE_PROPERTIES_2_EXTENSION_NAME);

VkInstanceCreateInfo instance_create_info = {};

instance_create_info.enabledExtensionCount = static_cast<uint32_t>(instance_extensions.size());

instance_create_info.ppEnabledExtensionNames = instance_extensions.data();

vkCreateInstance(&instance_create_info, nullptr, &myInstance));

// ...

std::vector<const char*> device_extensions;

device_extensions.push_back(VK_KHR_DRIVER_PROPERTIES_EXTENSION_NAME);

VkDeviceCreateInfo device_create_info = {};

device_create_info.enabledExtensionCount = static_cast<uint32_t>(device_extensions.size());

device_create_info.ppEnabledExtensionNames = device_extensions.data();

vkCreateDevice(physicalDevice, &device_create_info, nullptr, &myDevice);19.4. Check for feature bits

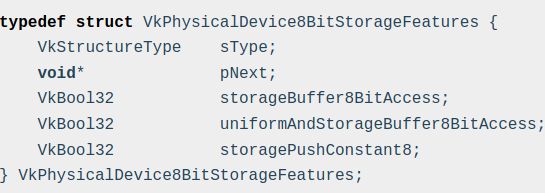

It is important to remember that extensions add the existence of functionality to the Vulkan spec, but this doesn’t mean that all features of an extension are available if the extension is supported. An example is an extension such as VK_KHR_8bit_storage, which has 3 features it exposes in VkPhysicalDevice8BitStorageFeatures.

This means after enabling the extension, an application will still need to query and enable the features needed from an extension.

19.5. Promotion Process

When minor versions of Vulkan are released, some extensions are promoted as defined in the spec. The goal of promotion is to have extended functionality, that the Vulkan Working Group has decided is widely supported, to be in the core Vulkan spec. More details about Vulkan versions can be found in the version chapter.

An example would be something such as VK_KHR_get_physical_device_properties2 which is used for most other extensions. In Vulkan 1.0, an application has to query for support of VK_KHR_get_physical_device_properties2 before being able to call a function such as vkGetPhysicalDeviceFeatures2KHR. Starting in Vulkan 1.1, the vkGetPhysicalDeviceFeatures2 function is guaranteed to be supported.

Another way to look at promotion is with the VK_KHR_8bit_storage as an example again. Since Vulkan 1.0 some features, such as textureCompressionASTC_LDR, are not required to be supported, but are available to query without needing to enable any extensions. Starting in Vulkan 1.2 when VK_KHR_8bit_storage was promoted to core, all the features in VkPhysicalDevice8BitStorageFeatures can now be found in VkPhysicalDeviceVulkan12Features.

19.5.1. Promotion Change of Behavior

It is important to realize there is a subtle difference for some extension that are promoted. The spec describes how promotion can involve minor changes such as in the extension’s “Feature advertisement/enablement”. To best describe the subtlety of this, VK_KHR_8bit_storage can be used as a use case.

The Vulkan spec describes the change for VK_KHR_8bit_storage for Vulkan 1.2 where it states:

If the VK_KHR_8bit_storage extension is not supported, support for the SPIR-V StorageBuffer8BitAccess capability in shader modules is optional.

"not supported" here refers to the fact that an implementation might support Vulkan 1.2+, but if an application queries vkEnumerateDeviceExtensionProperties it is possible that VK_KHR_8bit_storage will not be in the result.

-

If

VK_KHR_8bit_storageis found invkEnumerateDeviceExtensionPropertiesthen thestorageBuffer8BitAccessfeature is guaranteed to be supported. -

If

VK_KHR_8bit_storageis not found invkEnumerateDeviceExtensionPropertiesthen thestorageBuffer8BitAccessfeature might be supported and can be checked by queryingVkPhysicalDeviceVulkan12Features::storageBuffer8BitAccess.

The list of all feature changes to promoted extensions can be found in the version appendix of the spec.

permalink: /Notes/004-3d-rendering/vulkan/chapters/enabling_features.html ---

20. Enabling Features

This section goes over the logistics for enabling features.

20.1. Category of Features

All features in Vulkan can be categorized/found in 3 sections

-

Core 1.0 Features

-

These are the set of features that were available from the initial 1.0 release of Vulkan. The list of features can be found in VkPhysicalDeviceFeatures

-

-

Future Core Version Features

-

With Vulkan 1.1+ some new features were added to the core version of Vulkan. To keep the size of

VkPhysicalDeviceFeaturesbackward compatible, new structs were created to hold the grouping of features.

-

-

Extension Features

20.2. How to Enable the Features

All features must be enabled at VkDevice creation time inside the VkDeviceCreateInfo struct.

| Note | Don’t forget to query first with |

For the Core 1.0 Features, this is as simple as setting VkDeviceCreateInfo::pEnabledFeatures with the features desired to be turned on.

VkPhysicalDeviceFeatures features = {};

vkGetPhysicalDeviceFeatures(physical_device, &features);

// Logic if feature is not supported

if (features.robustBufferAccess == VK_FALSE) {

}

VkDeviceCreateInfo info = {};

info.pEnabledFeatures = &features;For all features, including the Core 1.0 Features, use VkPhysicalDeviceFeatures2 to pass into VkDeviceCreateInfo.pNext

VkPhysicalDeviceShaderDrawParametersFeatures ext_feature = {};

VkPhysicalDeviceFeatures2 physical_features2 = {};

physical_features2.pNext = &ext_feature;

vkGetPhysicalDeviceFeatures2(physical_device, &physical_features2);

// Logic if feature is not supported

if (ext_feature.shaderDrawParameters == VK_FALSE) {

}

VkDeviceCreateInfo info = {};

info.pNext = &physical_features2;The same works for the “Future Core Version Features” too.

VkPhysicalDeviceVulkan11Features features11 = {};

VkPhysicalDeviceFeatures2 physical_features2 = {};

physical_features2.pNext = &features11;

vkGetPhysicalDeviceFeatures2(physical_device, &physical_features2);

// Logic if feature is not supported

if (features11.shaderDrawParameters == VK_FALSE) {

}

VkDeviceCreateInfo info = {};

info.pNext = &physical_features2;permalink:/Notes/004-3d-rendering/vulkan/chapters/spirv_extensions.html layout: default ---

21. Using SPIR-V Extensions

SPIR-V is the shader representation used at vkCreateShaderModule time. Just like Vulkan, SPIR-V also has extensions and a capabilities system.

It is important to remember that SPIR-V is an intermediate language and not an API, it relies on an API, such as Vulkan, to expose what features are available to the application at runtime. This chapter aims to explain how Vulkan, as a SPIR-V client API, interacts with the SPIR-V extensions and capabilities.

21.1. SPIR-V Extension Example

For this example, the VK_KHR_8bit_storage and SPV_KHR_8bit_storage will be used to expose the UniformAndStorageBuffer8BitAccess capability. The following is what the SPIR-V disassembled looks like:

OpCapability Shader

OpCapability UniformAndStorageBuffer8BitAccess

OpExtension "SPV_KHR_8bit_storage"21.1.1. Steps for using SPIR-V features:

-

Make sure the SPIR-V extension and capability are available in Vulkan.

-

Check if the required Vulkan extension, features or version are supported.

-

If needed, enable the Vulkan extension and features.

-

If needed, see if there is a matching extension for the high-level shading language (ex. GLSL or HLSL) being used.

Breaking down each step in more detail:

Check if SPIR-V feature is supported

Depending on the shader feature there might only be a OpExtension or OpCapability that is needed. For this example, the UniformAndStorageBuffer8BitAccess is part of the SPV_KHR_8bit_storage extension.

To check if the SPIR-V extension is supported take a look at the Supported SPIR-V Extension Table in the Vulkan Spec.

Also, take a look at the Supported SPIR-V Capabilities Table in the Vulkan Spec.

| Note | while it says |

Luckily if you forget to check, the Vulkan Validation Layers has an auto-generated validation in place. Both the Validation Layers and the Vulkan Spec table are all based on the ./xml/vk.xml file.

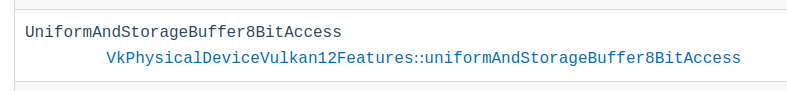

<spirvcapability name="UniformAndStorageBuffer8BitAccess">

<enable struct="VkPhysicalDeviceVulkan12Features" feature="uniformAndStorageBuffer8BitAccess" requires="VK_VERSION_1_2,VK_KHR_8bit_storage"/>

</spirvcapability>

<spirvextension name="SPV_KHR_8bit_storage">

<enable version="VK_VERSION_1_2"/>

<enable extension="VK_KHR_8bit_storage"/>

</spirvextension>Check for support then enable if needed

In this example, either VK_KHR_8bit_storage or a Vulkan 1.2 device is required.

If using a Vulkan 1.0 or 1.1 device, the VK_KHR_8bit_storage extension will need to be supported and enabled at device creation time.

Regardless of using the Vulkan extension or version, if required, an app still must make sure any matching Vulkan feature needed is supported and enabled at device creation time. Some SPIR-V extensions and capabilities don’t require a Vulkan feature, but this is all listed in the tables in the spec.

For this example, either the VkPhysicalDeviceVulkan12Features::uniformAndStorageBuffer8BitAccess or VkPhysicalDevice8BitStorageFeatures::uniformAndStorageBuffer8BitAccess feature must be supported and enabled.

Using high level shading language extensions

For this example, GLSL has a GL_EXT_shader_16bit_storage extension that includes the match GL_EXT_shader_8bit_storage extension in it.

Tools such as glslang and SPIRV-Tools will handle to make sure the matching OpExtension and OpCapability are used.

permalink: /Notes/004-3d-rendering/vulkan/chapters/formats.html ---

22. Formats

Vulkan formats are used to describe how memory is laid out. This chapter aims to give a high-level overview of the variations of formats in Vulkan and some logistical information for how to use them. All details are already well specified in both the Vulkan Spec format chapter and the Khronos Data Format Specification.

The most common use case for a VkFormat is when creating a VkImage. Because the VkFormats are well defined, they are also used when describing the memory layout for things such as a VkBufferView, vertex input attribute, mapping SPIR-V image formats, creating triangle geometry in a bottom-level acceleration structure, etc.

22.1. Feature Support

It is important to understand that "format support" is not a single binary value per format, but rather each format has a set of VkFormatFeatureFlagBits that each describes with features are supported for a format.

The supported formats may vary across implementations, but a minimum set of format features are guaranteed. An application can query for the supported format properties.

| Note | Both VK_KHR_get_physical_device_properties2 and VK_KHR_format_feature_flags2 expose another way to query for format features. |

22.1.1. Format Feature Query Example

In this example, the code will check if the VK_FORMAT_R8_UNORM format supports being sampled from a VkImage created with VK_IMAGE_TILING_LINEAR for VkImageCreateInfo::tiling. To do this, the code will query the linearTilingFeatures flags for VK_FORMAT_R8_UNORM to see if the VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT is supported by the implementation.

// Using core Vulkan 1.0

VkFormatProperties formatProperties;

vkGetPhysicalDeviceFormatProperties(physicalDevice, VK_FORMAT_R8_UNORM, &formatProperties);

if ((formatProperties.linearTilingFeatures & VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT) != 0) {

// supported

} else {

// not supported

}// Using core Vulkan 1.1 or VK_KHR_get_physical_device_properties2

VkFormatProperties2 formatProperties2;

formatProperties2.sType = VK_STRUCTURE_TYPE_FORMAT_PROPERTIES_2;

formatProperties2.pNext = nullptr; // used for possible extensions

vkGetPhysicalDeviceFormatProperties2(physicalDevice, VK_FORMAT_R8_UNORM, &formatProperties2);

if ((formatProperties2.formatProperties.linearTilingFeatures & VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT) != 0) {

// supported

} else {

// not supported

}// Using VK_KHR_format_feature_flags2

VkFormatProperties3KHR formatProperties3;

formatProperties2.sType = VK_STRUCTURE_TYPE_FORMAT_PROPERTIES_3_KHR;

formatProperties2.pNext = nullptr;

VkFormatProperties2 formatProperties2;

formatProperties2.sType = VK_STRUCTURE_TYPE_FORMAT_PROPERTIES_2;

formatProperties2.pNext = &formatProperties3;

vkGetPhysicalDeviceFormatProperties2(physicalDevice, VK_FORMAT_R8_UNORM, &formatProperties2);

if ((formatProperties3.linearTilingFeatures & VK_FORMAT_FEATURE_2_STORAGE_IMAGE_BIT_KHR) != 0) {

// supported

} else {

// not supported

}22.2. Variations of Formats

Formats come in many variations, most can be grouped by the name of the format. When dealing with images, the VkImageAspectFlagBits values are used to represent which part of the data is being accessed for operations such as clears and copies.

22.2.1. Color

Format with a R, G, B or A component and accessed with the VK_IMAGE_ASPECT_COLOR_BIT

22.2.2. Depth and Stencil

Formats with a D or S component. These formats are considered opaque and have special rules when it comes to copy to and from depth/stencil images.

Some formats have both a depth and stencil component and can be accessed separately with VK_IMAGE_ASPECT_DEPTH_BIT and VK_IMAGE_ASPECT_STENCIL_BIT.

| Note | VK_KHR_separate_depth_stencil_layouts and VK_EXT_separate_stencil_usage, which are both promoted to Vulkan 1.2, can be used to have finer control between the depth and stencil components. |

More information about depth format can also be found in the depth chapter.

22.2.3. Compressed

Compressed image formats representation of multiple pixels encoded interdependently within a region.

| Format | How to enable |

|---|---|

| |

| |

| |

22.2.4. Planar

VK_KHR_sampler_ycbcr_conversion and VK_EXT_ycbcr_2plane_444_formats add multi-planar formats to Vulkan. The planes can be accessed separately with VK_IMAGE_ASPECT_PLANE_0_BIT, VK_IMAGE_ASPECT_PLANE_1_BIT, and VK_IMAGE_ASPECT_PLANE_2_BIT.

22.2.5. Packed

Packed formats are for the purposes of address alignment. As an example, VK_FORMAT_A8B8G8R8_UNORM_PACK32 and VK_FORMAT_R8G8B8A8_UNORM might seem very similar, but when using the formula from the Vertex Input Extraction section of the spec

attribAddress = bufferBindingAddress + vertexOffset + attribDesc.offset;

For VK_FORMAT_R8G8B8A8_UNORM the attribAddress has to be a multiple of the component size (8 bits) while VK_FORMAT_A8B8G8R8_UNORM_PACK32 has to be a multiple of the packed size (32 bits).

22.2.6. External

Currently only supported with the VK_ANDROID_external_memory_android_hardware_buffer extension. This extension allows Android applications to import implementation-defined external formats to be used with a VkSamplerYcbcrConversion. There are many restrictions what are allowed with these external formats which are documented in the spec.

permalink:/Notes/004-3d-rendering/vulkan/chapters/queues.html layout: default ---

23. Queues

An application submits work to a VkQueue, normally in the form of VkCommandBuffer objects or sparse bindings.

Command buffers submitted to a VkQueue start in order, but are allowed to proceed independently after that and complete out of order.

Command buffers submitted to different queues are unordered relative to each other unless you explicitly synchronize them with a VkSemaphore.

You can only submit work to a VkQueue from one thread at a time, but different threads can submit work to a different VkQueue simultaneously.

How a VkQueue is mapped to the underlying hardware is implementation-defined. Some implementations will have multiple hardware queues and submitting work to multiple VkQueues will proceed independently and concurrently. Some implementations will do scheduling at a kernel driver level before submitting work to the hardware. There is no current way in Vulkan to expose the exact details how each VkQueue is mapped.

| Note | Not all applications will require or benefit from multiple queues. It is reasonable for an application to have a single “universal” graphics supported queue to submit all the work to the GPU. |

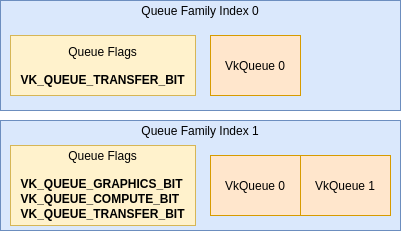

23.1. Queue Family

There are various types of operations a VkQueue can support. A “Queue Family” just describes a set of VkQueues that have common properties and support the same functionality, as advertised in VkQueueFamilyProperties.

The following are the queue operations found in VkQueueFlagBits:

-

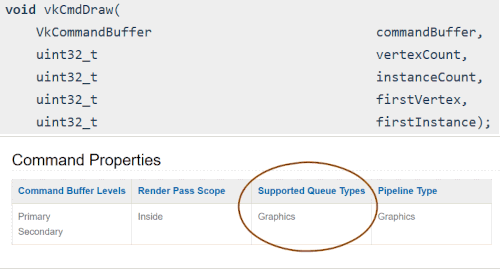

VK_QUEUE_GRAPHICS_BITused forvkCmdDraw*and graphic pipeline commands. -

VK_QUEUE_COMPUTE_BITused forvkCmdDispatch*andvkCmdTraceRays*and compute pipeline related commands. -

VK_QUEUE_TRANSFER_BITused for all transfer commands.-

VK_PIPELINE_STAGE_TRANSFER_BIT in the Spec describes “transfer commands”.

-

Queue Families with only

VK_QUEUE_TRANSFER_BITare usually for using DMA to asynchronously transfer data between host and device memory on discrete GPUs, so transfers can be done concurrently with independent graphics/compute operations. -

VK_QUEUE_GRAPHICS_BITandVK_QUEUE_COMPUTE_BITcan always implicitly acceptVK_QUEUE_TRANSFER_BITcommands.

-

-

VK_QUEUE_SPARSE_BINDING_BITused for binding sparse resources to memory withvkQueueBindSparse. -

VK_QUEUE_PROTECTED_BITused for protected memory. -

VK_QUEUE_VIDEO_DECODE_BIT_KHRandVK_QUEUE_VIDEO_ENCODE_BIT_KHRused with Vulkan Video.

23.1.1. Knowing which Queue Family is needed

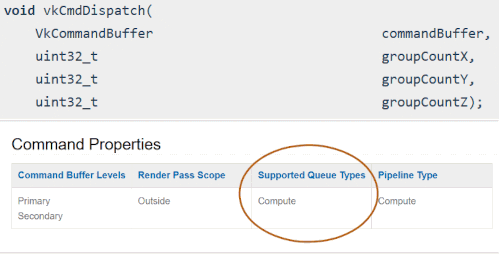

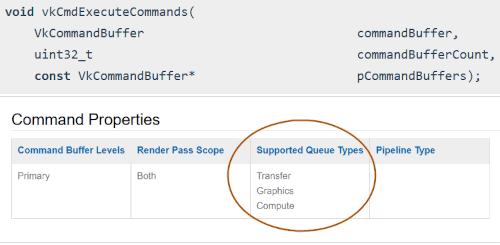

Each operation in the Vulkan Spec has a “Supported Queue Types” section generated from the vk.xml file. The following is 3 different examples of what it looks like in the Spec:

23.1.2. Querying for Queue Family

The following is the simplest logic needed if an application only wants a single graphics VkQueue

uint32_t count = 0;

vkGetPhysicalDeviceQueueFamilyProperties(physicalDevice, &count, nullptr);

std::vector<VkQueueFamilyProperties> properties(count);