查看模型结构

文本方式

print(model)

如whisper:

Whisper(

(encoder): AudioEncoder(

(conv1): Conv1d(80, 512, kernel_size=(3,), stride=(1,), padding=(1,))

(conv2): Conv1d(512, 512, kernel_size=(3,), stride=(2,), padding=(1,))

(blocks): ModuleList(

(0-5): 6 x ResidualAttentionBlock(

(attn): MultiHeadAttention(

(query): Linear(in_features=512, out_features=512, bias=True)

(key): Linear(in_features=512, out_features=512, bias=False)

(value): Linear(in_features=512, out_features=512, bias=True)

(out): Linear(in_features=512, out_features=512, bias=True)

)

(attn_ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(mlp): Sequential(

(0): Linear(in_features=512, out_features=2048, bias=True)

(1): GELU(approximate='none')

(2): Linear(in_features=2048, out_features=512, bias=True)

)

(mlp_ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

)

)

(ln_post): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

)

(decoder): TextDecoder(

(token_embedding): Embedding(51865, 512)

(blocks): ModuleList(

(0-5): 6 x ResidualAttentionBlock(

(attn): MultiHeadAttention(

(query): Linear(in_features=512, out_features=512, bias=True)

(key): Linear(in_features=512, out_features=512, bias=False)

(value): Linear(in_features=512, out_features=512, bias=True)

(out): Linear(in_features=512, out_features=512, bias=True)

)

(attn_ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(cross_attn): MultiHeadAttention(

(query): Linear(in_features=512, out_features=512, bias=True)

(key): Linear(in_features=512, out_features=512, bias=False)

(value): Linear(in_features=512, out_features=512, bias=True)

(out): Linear(in_features=512, out_features=512, bias=True)

)

(cross_attn_ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(mlp): Sequential(

(0): Linear(in_features=512, out_features=2048, bias=True)

(1): GELU(approximate='none')

(2): Linear(in_features=2048, out_features=512, bias=True)

)

(mlp_ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

)

)

(ln): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

)

)

vqgan_imagenet_f16_1024

参数量:89,623,492

VQModel(

(encoder): Encoder(

(conv_in): Conv2d(3, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(down): ModuleList(

(0-1): 2 x Module(

(block): ModuleList(

(0-1): 2 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2))

)

)

(2): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2))

)

)

(3): Module(

(block): ModuleList(

(0-1): 2 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2))

)

)

(4): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1))

)

(1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList(

(0-1): 2 x AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

)

)

)

(mid): Module(

(block_1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(attn_1): AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

(block_2): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(norm_out): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv_out): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(decoder): Decoder(

(conv_in): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(mid): Module(

(block_1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(attn_1): AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

(block_2): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(up): ModuleList(

(0): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

)

(1): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))

)

(1-2): 2 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(2): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(3): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1-2): 2 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(4): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList(

(0-2): 3 x AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

)

(upsample): Upsample(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

)

(norm_out): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv_out): Conv2d(128, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(loss): VQLPIPSWithDiscriminator(

(perceptual_loss): LPIPS(

(scaling_layer): ScalingLayer()

(net): vgg16(

(slice1): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

)

(slice2): Sequential(

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

)

(slice3): Sequential(

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

)

(slice4): Sequential(

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

)

(slice5): Sequential(

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

)

)

(lin0): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(64, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin1): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(128, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin2): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(256, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin3): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(512, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin4): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(512, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(discriminator): NLayerDiscriminator(

(main): Sequential(

(0): Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))

(1): LeakyReLU(negative_slope=0.2, inplace=True)

(2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): LeakyReLU(negative_slope=0.2, inplace=True)

(5): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(7): LeakyReLU(negative_slope=0.2, inplace=True)

(8): Conv2d(256, 512, kernel_size=(4, 4), stride=(1, 1), padding=(1, 1), bias=False)

(9): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(10): LeakyReLU(negative_slope=0.2, inplace=True)

(11): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), padding=(1, 1))

)

)

)

(quantize): VectorQuantizer2(

(embedding): Embedding(1024, 256)

)

(quant_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(post_quant_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

vqgan_imagenet_f16_16384

参数量:91,453,380

VQModel(

(encoder): Encoder(

(conv_in): Conv2d(3, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(down): ModuleList(

(0-1): 2 x Module(

(block): ModuleList(

(0-1): 2 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2))

)

)

(2): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2))

)

)

(3): Module(

(block): ModuleList(

(0-1): 2 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(downsample): Downsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2))

)

)

(4): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1))

)

(1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList(

(0-1): 2 x AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

)

)

)

(mid): Module(

(block_1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(attn_1): AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

(block_2): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(norm_out): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv_out): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(decoder): Decoder(

(conv_in): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(mid): Module(

(block_1): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(attn_1): AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

(block_2): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(up): ModuleList(

(0): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

)

(1): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))

)

(1-2): 2 x ResnetBlock(

(norm1): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(2): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(3): Module(

(block): ModuleList(

(0): ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(nin_shortcut): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1-2): 2 x ResnetBlock(

(norm1): GroupNorm(32, 256, eps=1e-06, affine=True)

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList()

(upsample): Upsample(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(4): Module(

(block): ModuleList(

(0-2): 3 x ResnetBlock(

(norm1): GroupNorm(32, 512, eps=1e-06, affine=True)

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)

(dropout): Dropout(p=0.0, inplace=False)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(attn): ModuleList(

(0-2): 3 x AttnBlock(

(norm): GroupNorm(32, 512, eps=1e-06, affine=True)

(q): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(k): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(v): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(proj_out): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

)

)

(upsample): Upsample(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

)

(norm_out): GroupNorm(32, 128, eps=1e-06, affine=True)

(conv_out): Conv2d(128, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(loss): VQLPIPSWithDiscriminator(

(perceptual_loss): LPIPS(

(scaling_layer): ScalingLayer()

(net): vgg16(

(slice1): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

)

(slice2): Sequential(

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

)

(slice3): Sequential(

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

)

(slice4): Sequential(

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

)

(slice5): Sequential(

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

)

)

(lin0): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(64, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin1): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(128, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin2): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(256, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin3): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(512, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(lin4): NetLinLayer(

(model): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(512, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(discriminator): NLayerDiscriminator(

(main): Sequential(

(0): Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))

(1): LeakyReLU(negative_slope=0.2, inplace=True)

(2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): LeakyReLU(negative_slope=0.2, inplace=True)

(5): Conv2d(128, 256, kernel_size=(4, 4), stride=(1, 1), padding=(1, 1), bias=False)

(6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(7): LeakyReLU(negative_slope=0.2, inplace=True)

(8): Conv2d(256, 1, kernel_size=(4, 4), stride=(1, 1), padding=(1, 1))

)

)

)

(quantize): VectorQuantizer2(

(embedding): Embedding(16384, 256)

)

(quant_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(post_quant_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

dalle_encoder

参数量:53,786,240

Encoder(

(blocks): Sequential(

(input): Conv2d(n_in=3, n_out=256, kw=7, use_float16=True, device=device(type='cpu'), requires_grad=False)

(group_1): Sequential(

(block_1): EncoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=256, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=64, n_out=256, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): EncoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=256, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=64, n_out=256, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(group_2): Sequential(

(block_1): EncoderBlock(

(id_path): Conv2d(n_in=256, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=256, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=128, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): EncoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=512, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=128, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(group_3): Sequential(

(block_1): EncoderBlock(

(id_path): Conv2d(n_in=512, n_out=1024, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=512, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=256, n_out=1024, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): EncoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=1024, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=256, n_out=1024, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(group_4): Sequential(

(block_1): EncoderBlock(

(id_path): Conv2d(n_in=1024, n_out=2048, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=1024, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=512, n_out=2048, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): EncoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=2048, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=512, n_out=2048, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

)

(output): Sequential(

(relu): ReLU()

(conv): Conv2d(n_in=2048, n_out=8192, kw=1, use_float16=False, device=device(type='cpu'), requires_grad=False)

)

)

)

decoder_dalle

参数量:43,829,766

Decoder(

(blocks): Sequential(

(input): Conv2d(n_in=8192, n_out=128, kw=1, use_float16=False, device=device(type='cpu'), requires_grad=False)

(group_1): Sequential(

(block_1): DecoderBlock(

(id_path): Conv2d(n_in=128, n_out=2048, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=128, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=512, n_out=2048, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): DecoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=2048, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=512, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=512, n_out=2048, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(upsample): Upsample(scale_factor=2.0, mode='nearest')

)

(group_2): Sequential(

(block_1): DecoderBlock(

(id_path): Conv2d(n_in=2048, n_out=1024, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=2048, n_out=256, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=256, n_out=1024, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): DecoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=1024, n_out=256, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=256, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=256, n_out=1024, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(upsample): Upsample(scale_factor=2.0, mode='nearest')

)

(group_3): Sequential(

(block_1): DecoderBlock(

(id_path): Conv2d(n_in=1024, n_out=512, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=1024, n_out=128, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=128, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): DecoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=512, n_out=128, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=128, n_out=128, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=128, n_out=512, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(upsample): Upsample(scale_factor=2.0, mode='nearest')

)

(group_4): Sequential(

(block_1): DecoderBlock(

(id_path): Conv2d(n_in=512, n_out=256, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=512, n_out=64, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=64, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

(block_2): DecoderBlock(

(id_path): Identity()

(res_path): Sequential(

(relu_1): ReLU()

(conv_1): Conv2d(n_in=256, n_out=64, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_2): ReLU()

(conv_2): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_3): ReLU()

(conv_3): Conv2d(n_in=64, n_out=64, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

(relu_4): ReLU()

(conv_4): Conv2d(n_in=64, n_out=256, kw=3, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

)

(output): Sequential(

(relu): ReLU()

(conv): Conv2d(n_in=256, n_out=6, kw=1, use_float16=True, device=device(type='cpu'), requires_grad=False)

)

)

)

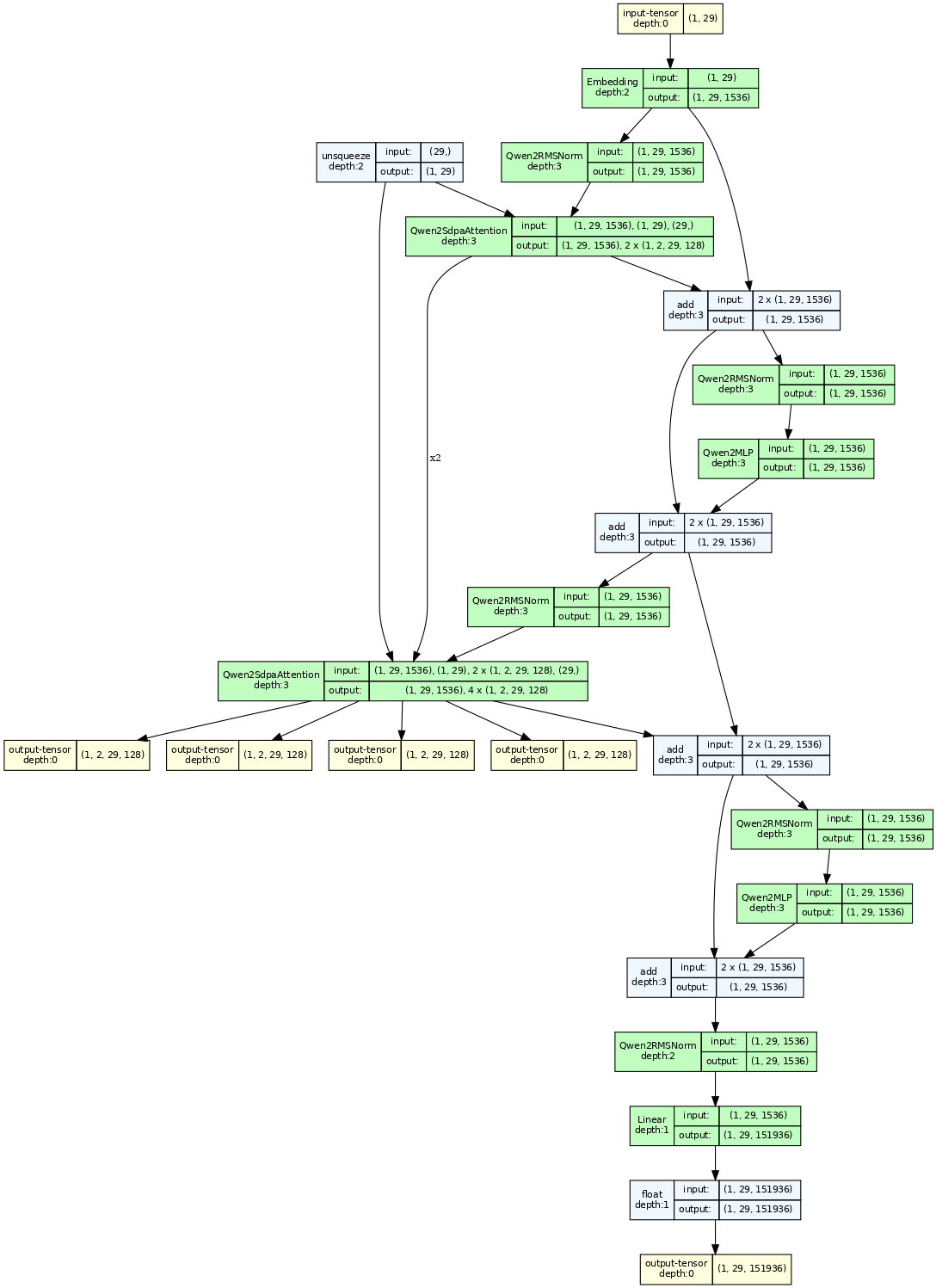

图形方式

torchview

from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda" # the device to load the model onto

from torchview import draw_graph

import torch

from calflops import calculate_flops

from transformers import AutoModel

from transformers import AutoTokenizer

batch_size, max_seq_length = 1, 128

path="/mnt/bn/znzx-public/models/Qwen2-1.5B-Instruct"

model = AutoModelForCausalLM.from_pretrained(

path,

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(path)

flops, macs, params = calculate_flops(model=model,

input_shape=(batch_size,max_seq_length),

transformer_tokenizer=tokenizer)

print("FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

prompt = "Give me a short introduction to large language model."

messages = [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": prompt}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(device)

# print(model.model.layers)

# help(model.model.layers)

model.model.layers = torch.nn.ModuleList(model.model.layers[0:2]) # 只保留两层,防止输出太长

model_graph = draw_graph(model, input_data=model_inputs.input_ids, device=device, save_graph=True)

generated_ids = model.generate(

model_inputs.input_ids,

max_new_tokens=512

)

#out = model(model_inputs.input_ids)

#make_dot(out)

#model_graph.visual_graph

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

计算参数量

sum([param.nelement() for param in model.parameters()])

计算模型算力

model = model1024.encoder

print([ name for name, item in model1024.named_children()])

num_params = sum([param.nelement() for param in model.parameters()])

print(f"参数量:{num_params}")

#print(model)

url = "/content/drive/MyDrive/images/IMG_0567.PNG"

#x_dalle = preprocess(PIL.Image.open(url)

x_vqgan = preprocess(PIL.Image.open(url), target_image_size=1024,

map_dalle=False)

#x_dalle = x_dalle.to(DEVICE)

x_vqgan = x_vqgan.to(DEVICE)

print(x_vqgan.shape)

from thop import profile,clever_format

flops,params = profile(model, inputs=(x_vqgan,), verbose=True)

flops,params = clever_format([flops, params], "%.3f")

print("flops:", flops, "params:", params)

from calflops import calculate_flops

flops, macs, params = calculate_flops(model=model,

input_shape=(1, 3, 1024,1024),

output_as_string=True,

output_precision=4)

print("FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

统计minicpm flops

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

torch.manual_seed(0)

from calflops import calculate_flops

from transformers import AutoModel

from transformers import AutoTokenizer

batch_size, max_seq_length = 1, 128

#model_name = ""

#model_save = "../pretrain_models/" + model_name

path = 'openbmb/MiniCPM-2B-dpo-bf16'

model_save=path

#model = AutoModel.from_pretrained(model_save)

model = AutoModelForCausalLM.from_pretrained(path, torch_dtype=torch.bfloat16, device_map='cuda', trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(model_save)

flops, macs, params = calculate_flops(model=model,

input_shape=(batch_size,max_seq_length),

transformer_tokenizer=tokenizer)

print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

python3 minicpm.py

/root/anaconda3/envs/minicpm/lib/python3.11/site-packages/huggingface_hub/file_download.py:1150: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

/root/anaconda3/envs/minicpm/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2654: FutureWarning: The `truncation_strategy` argument is deprecated and will be removed in a future version, use `truncation=True` to truncate examples to a max length. You can give a specific length with `max_length` (e.g. `max_length=45`) or leave max_length to None to truncate to the maximal input size of the model (e.g. 512 for Bert). If you have pairs of inputs, you can give a specific truncation strategy selected among `truncation='only_first'` (will only truncate the first sentence in the pairs) `truncation='only_second'` (will only truncate the second sentence in the pairs) or `truncation='longest_first'` (will iteratively remove tokens from the longest sentence in the pairs).

warnings.warn(

Asking to truncate to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no truncation.

------------------------------------- Calculate Flops Results -------------------------------------

Notations:

number of parameters (Params), number of multiply-accumulate operations(MACs),

number of floating-point operations (FLOPs), floating-point operations per second (FLOPS),

fwd FLOPs (model forward propagation FLOPs), bwd FLOPs (model backward propagation FLOPs),

default model backpropagation takes 2.00 times as much computation as forward propagation.

Total Training Params: 2.72 B

fwd MACs: 348.76 GMACs

fwd FLOPs: 697.55 GFLOPS

fwd+bwd MACs: 1.05 TMACs

fwd+bwd FLOPs: 2.09 TFLOPS

-------------------------------- Detailed Calculated FLOPs Results --------------------------------

Each module caculated is listed after its name in the following order:

params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

MiniCPMForCausalLM(

2.72 B = 100% Params, 348.76 GMACs = 100% MACs, 697.55 GFLOPS = 100% FLOPs

(model): MiniCPMModel(

2.72 B = 100% Params, 312.56 GMACs = 89.62% MACs, 625.15 GFLOPS = 89.62% FLOPs

(embed_tokens): Embedding(282.82 M = 10.38% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 122753, 2304)

(layers): ModuleList(

(0-39): 40 x MiniCPMDecoderLayer(

61.05 M = 2.24% Params, 7.81 GMACs = 2.24% MACs, 15.63 GFLOPS = 2.24% FLOPs

(self_attn): MiniCPMFlashAttention2(

21.23 M = 0.78% Params, 2.72 GMACs = 0.78% MACs, 5.44 GFLOPS = 0.78% FLOPs

(q_proj): Linear(5.31 M = 0.19% Params, 679.48 MMACs = 0.19% MACs, 1.36 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(k_proj): Linear(5.31 M = 0.19% Params, 679.48 MMACs = 0.19% MACs, 1.36 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(v_proj): Linear(5.31 M = 0.19% Params, 679.48 MMACs = 0.19% MACs, 1.36 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(o_proj): Linear(5.31 M = 0.19% Params, 679.48 MMACs = 0.19% MACs, 1.36 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(rotary_emb): MiniCPMRotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): MiniCPMMLP(

39.81 M = 1.46% Params, 5.1 GMACs = 1.46% MACs, 10.19 GFLOPS = 1.46% FLOPs

(gate_proj): Linear(13.27 M = 0.49% Params, 1.7 GMACs = 0.49% MACs, 3.4 GFLOPS = 0.49% FLOPs, in_features=2304, out_features=5760, bias=False)

(up_proj): Linear(13.27 M = 0.49% Params, 1.7 GMACs = 0.49% MACs, 3.4 GFLOPS = 0.49% FLOPs, in_features=2304, out_features=5760, bias=False)

(down_proj): Linear(13.27 M = 0.49% Params, 1.7 GMACs = 0.49% MACs, 3.4 GFLOPS = 0.49% FLOPs, in_features=5760, out_features=2304, bias=False)

(act_fn): SiLU(0 = 0% Params, 0 MACs = 0% MACs, 737.28 KFLOPS = 0% FLOPs)

)

(input_layernorm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(post_attention_layernorm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

)

(norm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(lm_head): Linear(282.82 M = 10.38% Params, 36.2 GMACs = 10.38% MACs, 72.4 GFLOPS = 10.38% FLOPs, in_features=2304, out_features=122753, bias=False)

)

---------------------------------------------------------------------------------------------------

Bert(hfl/chinese-roberta-wwm-ext) FLOPs:697.55 GFLOPS MACs:348.76 GMACs Params:2.72 B

统计的实际是计算128个token的flops,所以平均一个token是697/128=5.445 GFLOPS,即2.72GMACs,与参数个数基本一致。也就是说平均一个参数参与一个乘加的计算。

从图中也可以看出参数量分布:

| 名称 | 参数量 | 份数 | 总数 | | —————– | ——- | — | —— | | embedding | 282.82M | 1 | 0.282G | | transformer block | 61.05M | 40 | 2.442G | | lm_head | 282.82M | 1 | 0.282G | | 总数 | – | – | 2.724G | minicpm是embedding和lm head共享权重的。

如果我们把seq length设置成4096呢?

/root/anaconda3/envs/minicpm/lib/python3.11/site-packages/huggingface_hub/file_download.py:1150: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

/root/anaconda3/envs/minicpm/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2654: FutureWarning: The `truncation_strategy` argument is deprecated and will be removed in a future version, use `truncation=True` to truncate examples to a max length. You can give a specific length with `max_length` (e.g. `max_length=45`) or leave max_length to None to truncate to the maximal input size of the model (e.g. 512 for Bert). If you have pairs of inputs, you can give a specific truncation strategy selected among `truncation='only_first'` (will only truncate the first sentence in the pairs) `truncation='only_second'` (will only truncate the second sentence in the pairs) or `truncation='longest_first'` (will iteratively remove tokens from the longest sentence in the pairs).

warnings.warn(

Asking to truncate to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no truncation.

------------------------------------- Calculate Flops Results -------------------------------------

Notations:

number of parameters (Params), number of multiply-accumulate operations(MACs),

number of floating-point operations (FLOPs), floating-point operations per second (FLOPS),

fwd FLOPs (model forward propagation FLOPs), bwd FLOPs (model backward propagation FLOPs),

default model backpropagation takes 2.00 times as much computation as forward propagation.

Total Training Params: 2.72 B

fwd MACs: 11.16 TMACs

fwd FLOPs: 22.32 TFLOPS

fwd+bwd MACs: 33.48 TMACs

fwd+bwd FLOPs: 66.96 TFLOPS

-------------------------------- Detailed Calculated FLOPs Results --------------------------------

Each module caculated is listed after its name in the following order:

params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

MiniCPMForCausalLM(

2.72 B = 100% Params, 11.16 TMACs = 100% MACs, 22.32 TFLOPS = 100% FLOPs

(model): MiniCPMModel(

2.72 B = 100% Params, 10 TMACs = 89.62% MACs, 20 TFLOPS = 89.62% FLOPs

(embed_tokens): Embedding(282.82 M = 10.38% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 122753, 2304)

(layers): ModuleList(

(0-39): 40 x MiniCPMDecoderLayer(

61.05 M = 2.24% Params, 250.05 GMACs = 2.24% MACs, 500.12 GFLOPS = 2.24% FLOPs

(self_attn): MiniCPMFlashAttention2(

21.23 M = 0.78% Params, 86.97 GMACs = 0.78% MACs, 173.95 GFLOPS = 0.78% FLOPs

(q_proj): Linear(5.31 M = 0.19% Params, 21.74 GMACs = 0.19% MACs, 43.49 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(k_proj): Linear(5.31 M = 0.19% Params, 21.74 GMACs = 0.19% MACs, 43.49 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(v_proj): Linear(5.31 M = 0.19% Params, 21.74 GMACs = 0.19% MACs, 43.49 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(o_proj): Linear(5.31 M = 0.19% Params, 21.74 GMACs = 0.19% MACs, 43.49 GFLOPS = 0.19% FLOPs, in_features=2304, out_features=2304, bias=False)

(rotary_emb): MiniCPMRotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): MiniCPMMLP(

39.81 M = 1.46% Params, 163.07 GMACs = 1.46% MACs, 326.17 GFLOPS = 1.46% FLOPs

(gate_proj): Linear(13.27 M = 0.49% Params, 54.36 GMACs = 0.49% MACs, 108.72 GFLOPS = 0.49% FLOPs, in_features=2304, out_features=5760, bias=False)

(up_proj): Linear(13.27 M = 0.49% Params, 54.36 GMACs = 0.49% MACs, 108.72 GFLOPS = 0.49% FLOPs, in_features=2304, out_features=5760, bias=False)

(down_proj): Linear(13.27 M = 0.49% Params, 54.36 GMACs = 0.49% MACs, 108.72 GFLOPS = 0.49% FLOPs, in_features=5760, out_features=2304, bias=False)

(act_fn): SiLU(0 = 0% Params, 0 MACs = 0% MACs, 23.59 MFLOPS = 0% FLOPs)

)

(input_layernorm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(post_attention_layernorm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

)

(norm): MiniCPMRMSNorm(2.3 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(lm_head): Linear(282.82 M = 10.38% Params, 1.16 TMACs = 10.38% MACs, 2.32 TFLOPS = 10.38% FLOPs, in_features=2304, out_features=122753, bias=False)

)

---------------------------------------------------------------------------------------------------

Bert(hfl/chinese-roberta-wwm-ext) FLOPs:22.32 TFLOPS MACs:11.16 TMACs Params:2.72 B

算出来也是2.72GMACs。

phi-3-mini

Phi3ForCausalLM(

3.82 B = 100% Params, 479.69 GMACs = 100% MACs, 959.42 GFLOPS = 100% FLOPs

(model): Phi3Model(

3.72 B = 97.42% Params, 467.08 GMACs = 97.37% MACs, 934.21 GFLOPS = 97.37% FLOPs

(embed_tokens): Embedding(98.5 M = 2.58% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 32064, 3072, padding_idx=32000)

(embed_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(layers): ModuleList(

(0-31): 32 x Phi3DecoderLayer(

113.25 M = 2.96% Params, 14.6 GMACs = 3.04% MACs, 29.19 GFLOPS = 3.04% FLOPs

(self_attn): Phi3Attention(

37.75 M = 0.99% Params, 4.93 GMACs = 1.03% MACs, 9.87 GFLOPS = 1.03% FLOPs

(o_proj): Linear(9.44 M = 0.25% Params, 1.21 GMACs = 0.25% MACs, 2.42 GFLOPS = 0.25% FLOPs, in_features=3072, out_features=3072, bias=False)

(qkv_proj): Linear(28.31 M = 0.74% Params, 3.62 GMACs = 0.76% MACs, 7.25 GFLOPS = 0.76% FLOPs, in_features=3072, out_features=9216, bias=False)

(rotary_emb): Phi3RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3MLP(

75.5 M = 1.98% Params, 9.66 GMACs = 2.01% MACs, 19.33 GFLOPS = 2.01% FLOPs

(gate_up_proj): Linear(50.33 M = 1.32% Params, 6.44 GMACs = 1.34% MACs, 12.88 GFLOPS = 1.34% FLOPs, in_features=3072, out_features=16384, bias=False)

(down_proj): Linear(25.17 M = 0.66% Params, 3.22 GMACs = 0.67% MACs, 6.44 GFLOPS = 0.67% FLOPs, in_features=8192, out_features=3072, bias=False)

(activation_fn): SiLU(0 = 0% Params, 0 MACs = 0% MACs, 1.05 MFLOPS = 0% FLOPs)

)

(input_layernorm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(resid_attn_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(resid_mlp_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(post_attention_layernorm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

)

(norm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(lm_head): Linear(98.5 M = 2.58% Params, 12.61 GMACs = 2.63% MACs, 25.22 GFLOPS = 2.63% FLOPs, in_features=3072, out_features=32064, bias=False)

)

---------------------------------------------------------------------------------------------------

FLOPs:959.42 GFLOPS MACs:479.69 GMACs Params:3.82 B

phi-3.5-mini

架构没有变化

Total Training Params: 3.82 B

fwd MACs: 479.69 GMACs

fwd FLOPs: 959.42 GFLOPS

fwd+bwd MACs: 1.44 TMACs

fwd+bwd FLOPs: 2.88 TFLOPS

-------------------------------- Detailed Calculated FLOPs Results --------------------------------

Each module caculated is listed after its name in the following order:

params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

Phi3ForCausalLM(

3.82 B = 100% Params, 479.69 GMACs = 100% MACs, 959.42 GFLOPS = 100% FLOPs

(model): Phi3Model(

3.72 B = 97.42% Params, 467.08 GMACs = 97.37% MACs, 934.21 GFLOPS = 97.37% FLOPs

(embed_tokens): Embedding(98.5 M = 2.58% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 32064, 3072, padding_idx=32000)

(embed_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(layers): ModuleList(

(0-31): 32 x Phi3DecoderLayer(

113.25 M = 2.96% Params, 14.6 GMACs = 3.04% MACs, 29.19 GFLOPS = 3.04% FLOPs

(self_attn): Phi3Attention(

37.75 M = 0.99% Params, 4.93 GMACs = 1.03% MACs, 9.87 GFLOPS = 1.03% FLOPs

(o_proj): Linear(9.44 M = 0.25% Params, 1.21 GMACs = 0.25% MACs, 2.42 GFLOPS = 0.25% FLOPs, in_features=3072, out_features=3072, bias=False)

(qkv_proj): Linear(28.31 M = 0.74% Params, 3.62 GMACs = 0.76% MACs, 7.25 GFLOPS = 0.76% FLOPs, in_features=3072, out_features=9216, bias=False)

(rotary_emb): Phi3LongRoPEScaledRotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3MLP(

75.5 M = 1.98% Params, 9.66 GMACs = 2.01% MACs, 19.33 GFLOPS = 2.01% FLOPs

(gate_up_proj): Linear(50.33 M = 1.32% Params, 6.44 GMACs = 1.34% MACs, 12.88 GFLOPS = 1.34% FLOPs, in_features=3072, out_features=16384, bias=False)

(down_proj): Linear(25.17 M = 0.66% Params, 3.22 GMACs = 0.67% MACs, 6.44 GFLOPS = 0.67% FLOPs, in_features=8192, out_features=3072, bias=False)

(activation_fn): SiLU(0 = 0% Params, 0 MACs = 0% MACs, 1.05 MFLOPS = 0% FLOPs)

)

(input_layernorm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(resid_attn_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(resid_mlp_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

(post_attention_layernorm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

)

(norm): Phi3RMSNorm(3.07 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(lm_head): Linear(98.5 M = 2.58% Params, 12.61 GMACs = 2.63% MACs, 25.22 GFLOPS = 2.63% FLOPs, in_features=3072, out_features=32064, bias=False)

)

---------------------------------------------------------------------------------------------------

FLOPs:959.42 GFLOPS MACs:479.69 GMACs Params:3.82 B

qwen2-1.5B

Total Training Params: 1.54 B

fwd MACs: 197.58 GMACs

fwd FLOPs: 395.19 GFLOPS

fwd+bwd MACs: 592.73 GMACs

fwd+bwd FLOPs: 1.19 TFLOPS

-------------------------------- Detailed Calculated FLOPs Results --------------------------------

Each module caculated is listed after its name in the following order:

params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

Qwen2ForCausalLM(

1.54 B = 100% Params, 197.58 GMACs = 100% MACs, 395.19 GFLOPS = 100% FLOPs

(model): Qwen2Model(

1.54 B = 100% Params, 167.71 GMACs = 84.88% MACs, 335.44 GFLOPS = 84.88% FLOPs

(embed_tokens): Embedding(233.37 M = 15.12% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 151936, 1536)

(layers): ModuleList(

(0-27): 28 x Qwen2DecoderLayer(

46.8 M = 3.03% Params, 5.99 GMACs = 3.03% MACs, 11.98 GFLOPS = 3.03% FLOPs

(self_attn): Qwen2SdpaAttention(

5.51 M = 0.36% Params, 704.64 MMACs = 0.36% MACs, 1.41 GFLOPS = 0.36% FLOPs

(q_proj): Linear(2.36 M = 0.15% Params, 301.99 MMACs = 0.15% MACs, 603.98 MFLOPS = 0.15% FLOPs, in_features=1536, out_features=1536, bias=True)

(k_proj): Linear(393.47 K = 0.03% Params, 50.33 MMACs = 0.03% MACs, 100.66 MFLOPS = 0.03% FLOPs, in_features=1536, out_features=256, bias=True)

(v_proj): Linear(393.47 K = 0.03% Params, 50.33 MMACs = 0.03% MACs, 100.66 MFLOPS = 0.03% FLOPs, in_features=1536, out_features=256, bias=True)

(o_proj): Linear(2.36 M = 0.15% Params, 301.99 MMACs = 0.15% MACs, 603.98 MFLOPS = 0.15% FLOPs, in_features=1536, out_features=1536, bias=False)

(rotary_emb): Qwen2RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Qwen2MLP(

41.29 M = 2.67% Params, 5.28 GMACs = 2.67% MACs, 10.57 GFLOPS = 2.67% FLOPs

(gate_proj): Linear(13.76 M = 0.89% Params, 1.76 GMACs = 0.89% MACs, 3.52 GFLOPS = 0.89% FLOPs, in_features=1536, out_features=8960, bias=False)

(up_proj): Linear(13.76 M = 0.89% Params, 1.76 GMACs = 0.89% MACs, 3.52 GFLOPS = 0.89% FLOPs, in_features=1536, out_features=8960, bias=False)

(down_proj): Linear(13.76 M = 0.89% Params, 1.76 GMACs = 0.89% MACs, 3.52 GFLOPS = 0.89% FLOPs, in_features=8960, out_features=1536, bias=False)

(act_fn): SiLU(0 = 0% Params, 0 MACs = 0% MACs, 1.15 MFLOPS = 0% FLOPs)

)

(input_layernorm): Qwen2RMSNorm(1.54 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, (1536,), eps=1e-06)

(post_attention_layernorm): Qwen2RMSNorm(1.54 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, (1536,), eps=1e-06)

)

)

(norm): Qwen2RMSNorm(1.54 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, (1536,), eps=1e-06)

)

(lm_head): Linear(233.37 M = 15.12% Params, 29.87 GMACs = 15.12% MACs, 59.74 GFLOPS = 15.12% FLOPs, in_features=1536, out_features=151936, bias=False)

)

---------------------------------------------------------------------------------------------------

FLOPs:395.19 GFLOPS MACs:197.58 GMACs Params:1.54 B

phi-3-small

------------------------------------- Calculate Flops Results -------------------------------------

Notations:

number of parameters (Params), number of multiply-accumulate operations(MACs),

number of floating-point operations (FLOPs), floating-point operations per second (FLOPS),

fwd FLOPs (model forward propagation FLOPs), bwd FLOPs (model backward propagation FLOPs),

default model backpropagation takes 2.00 times as much computation as forward propagation.

Total Training Params: 7.39 B

fwd MACs: 945.97 GMACs

fwd FLOPs: 1.89 TFLOPS

fwd+bwd MACs: 2.84 TMACs

fwd+bwd FLOPs: 5.68 TFLOPS

-------------------------------- Detailed Calculated FLOPs Results --------------------------------

Each module caculated is listed after its name in the following order:

params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

Phi3SmallForCausalLM(

7.39 B = 100% Params, 945.97 GMACs = 100% MACs, 1.89 TFLOPS = 100% FLOPs

(model): Phi3SmallModel(

7.39 B = 100% Params, 893.35 GMACs = 94.44% MACs, 1.79 TFLOPS = 94.44% FLOPs

(embed_tokens): Embedding(411.04 M = 5.56% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 100352, 4096)

(embedding_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

(layers): ModuleList(

(0): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(_blocksparse_layer): BlockSparseAttentionLayer(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(1): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(2): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(_blocksparse_layer): BlockSparseAttentionLayer(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(3): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(4): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(_blocksparse_layer): BlockSparseAttentionLayer(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(5): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)

(dense): Linear(16.78 M = 0.23% Params, 2.15 GMACs = 0.23% MACs, 4.29 GFLOPS = 0.23% FLOPs, in_features=4096, out_features=4096, bias=True)

(rotary_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

)

(mlp): Phi3SmallMLP(

176.19 M = 2.38% Params, 22.55 GMACs = 2.38% MACs, 45.1 GFLOPS = 2.38% FLOPs

(up_proj): Linear(117.47 M = 1.59% Params, 15.03 GMACs = 1.59% MACs, 30.06 GFLOPS = 1.59% FLOPs, in_features=4096, out_features=28672, bias=True)

(down_proj): Linear(58.72 M = 0.79% Params, 7.52 GMACs = 0.79% MACs, 15.03 GFLOPS = 0.79% FLOPs, in_features=14336, out_features=4096, bias=True)

(dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.1, inplace=False)

)

(input_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

(post_attention_layernorm): LayerNorm(8.19 K = 0% Params, 0 MACs = 0% MACs, 2.62 MFLOPS = 0% FLOPs, (4096,), eps=1e-05, elementwise_affine=True)

)

(6): Phi3SmallDecoderLayer(

218.16 M = 2.95% Params, 27.92 GMACs = 2.95% MACs, 55.84 GFLOPS = 2.95% FLOPs

(self_attn): Phi3SmallSelfAttention(

41.95 M = 0.57% Params, 5.37 GMACs = 0.57% MACs, 10.74 GFLOPS = 0.57% FLOPs

(query_key_value): Linear(25.17 M = 0.34% Params, 3.22 GMACs = 0.34% MACs, 6.44 GFLOPS = 0.34% FLOPs, in_features=4096, out_features=6144, bias=True)